Assignment 3

Contents

Assignment 3#

%pip install pymc pytensor

Requirement already satisfied: pymc in /usr/local/lib/python3.10/dist-packages (5.10.4)

Requirement already satisfied: pytensor in /usr/local/lib/python3.10/dist-packages (2.18.6)

Requirement already satisfied: arviz>=0.13.0 in /usr/local/lib/python3.10/dist-packages (from pymc) (0.15.1)

Requirement already satisfied: cachetools>=4.2.1 in /usr/local/lib/python3.10/dist-packages (from pymc) (5.3.3)

Requirement already satisfied: cloudpickle in /usr/local/lib/python3.10/dist-packages (from pymc) (2.2.1)

Requirement already satisfied: fastprogress>=0.2.0 in /usr/local/lib/python3.10/dist-packages (from pymc) (1.0.3)

Requirement already satisfied: numpy>=1.15.0 in /usr/local/lib/python3.10/dist-packages (from pymc) (1.25.2)

Requirement already satisfied: pandas>=0.24.0 in /usr/local/lib/python3.10/dist-packages (from pymc) (1.5.3)

Requirement already satisfied: scipy>=1.4.1 in /usr/local/lib/python3.10/dist-packages (from pymc) (1.11.4)

Requirement already satisfied: typing-extensions>=3.7.4 in /usr/local/lib/python3.10/dist-packages (from pymc) (4.10.0)

Requirement already satisfied: setuptools>=48.0.0 in /usr/local/lib/python3.10/dist-packages (from pytensor) (67.7.2)

Requirement already satisfied: filelock in /usr/local/lib/python3.10/dist-packages (from pytensor) (3.13.1)

Requirement already satisfied: etuples in /usr/local/lib/python3.10/dist-packages (from pytensor) (0.3.9)

Requirement already satisfied: logical-unification in /usr/local/lib/python3.10/dist-packages (from pytensor) (0.4.6)

Requirement already satisfied: miniKanren in /usr/local/lib/python3.10/dist-packages (from pytensor) (1.0.3)

Requirement already satisfied: cons in /usr/local/lib/python3.10/dist-packages (from pytensor) (0.4.6)

Requirement already satisfied: matplotlib>=3.2 in /usr/local/lib/python3.10/dist-packages (from arviz>=0.13.0->pymc) (3.7.1)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from arviz>=0.13.0->pymc) (23.2)

Requirement already satisfied: xarray>=0.21.0 in /usr/local/lib/python3.10/dist-packages (from arviz>=0.13.0->pymc) (2023.7.0)

Requirement already satisfied: h5netcdf>=1.0.2 in /usr/local/lib/python3.10/dist-packages (from arviz>=0.13.0->pymc) (1.3.0)

Requirement already satisfied: xarray-einstats>=0.3 in /usr/local/lib/python3.10/dist-packages (from arviz>=0.13.0->pymc) (0.7.0)

Requirement already satisfied: python-dateutil>=2.8.1 in /usr/local/lib/python3.10/dist-packages (from pandas>=0.24.0->pymc) (2.8.2)

Requirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.10/dist-packages (from pandas>=0.24.0->pymc) (2023.4)

Requirement already satisfied: toolz in /usr/local/lib/python3.10/dist-packages (from logical-unification->pytensor) (0.12.1)

Requirement already satisfied: multipledispatch in /usr/local/lib/python3.10/dist-packages (from logical-unification->pytensor) (1.0.0)

Requirement already satisfied: h5py in /usr/local/lib/python3.10/dist-packages (from h5netcdf>=1.0.2->arviz>=0.13.0->pymc) (3.9.0)

Requirement already satisfied: contourpy>=1.0.1 in /usr/local/lib/python3.10/dist-packages (from matplotlib>=3.2->arviz>=0.13.0->pymc) (1.2.0)

Requirement already satisfied: cycler>=0.10 in /usr/local/lib/python3.10/dist-packages (from matplotlib>=3.2->arviz>=0.13.0->pymc) (0.12.1)

Requirement already satisfied: fonttools>=4.22.0 in /usr/local/lib/python3.10/dist-packages (from matplotlib>=3.2->arviz>=0.13.0->pymc) (4.49.0)

Requirement already satisfied: kiwisolver>=1.0.1 in /usr/local/lib/python3.10/dist-packages (from matplotlib>=3.2->arviz>=0.13.0->pymc) (1.4.5)

Requirement already satisfied: pillow>=6.2.0 in /usr/local/lib/python3.10/dist-packages (from matplotlib>=3.2->arviz>=0.13.0->pymc) (9.4.0)

Requirement already satisfied: pyparsing>=2.3.1 in /usr/local/lib/python3.10/dist-packages (from matplotlib>=3.2->arviz>=0.13.0->pymc) (3.1.1)

Requirement already satisfied: six>=1.5 in /usr/local/lib/python3.10/dist-packages (from python-dateutil>=2.8.1->pandas>=0.24.0->pymc) (1.16.0)

import pymc as pm

import numpy as np

import pandas as pd

from sklearn.datasets import fetch_california_housing

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression #for standard linear regression

from sklearn.metrics import mean_squared_error

from sklearn.preprocessing import StandardScaler

California Housing Dataset#

# Load California housing data

housing = fetch_california_housing()

X, y = housing.data, housing.target

housing

{'data': array([[ 8.3252 , 41. , 6.98412698, ..., 2.55555556,

37.88 , -122.23 ],

[ 8.3014 , 21. , 6.23813708, ..., 2.10984183,

37.86 , -122.22 ],

[ 7.2574 , 52. , 8.28813559, ..., 2.80225989,

37.85 , -122.24 ],

...,

[ 1.7 , 17. , 5.20554273, ..., 2.3256351 ,

39.43 , -121.22 ],

[ 1.8672 , 18. , 5.32951289, ..., 2.12320917,

39.43 , -121.32 ],

[ 2.3886 , 16. , 5.25471698, ..., 2.61698113,

39.37 , -121.24 ]]),

'target': array([4.526, 3.585, 3.521, ..., 0.923, 0.847, 0.894]),

'frame': None,

'target_names': ['MedHouseVal'],

'feature_names': ['MedInc',

'HouseAge',

'AveRooms',

'AveBedrms',

'Population',

'AveOccup',

'Latitude',

'Longitude'],

'DESCR': '.. _california_housing_dataset:\n\nCalifornia Housing dataset\n--------------------------\n\n**Data Set Characteristics:**\n\n :Number of Instances: 20640\n\n :Number of Attributes: 8 numeric, predictive attributes and the target\n\n :Attribute Information:\n - MedInc median income in block group\n - HouseAge median house age in block group\n - AveRooms average number of rooms per household\n - AveBedrms average number of bedrooms per household\n - Population block group population\n - AveOccup average number of household members\n - Latitude block group latitude\n - Longitude block group longitude\n\n :Missing Attribute Values: None\n\nThis dataset was obtained from the StatLib repository.\nhttps://www.dcc.fc.up.pt/~ltorgo/Regression/cal_housing.html\n\nThe target variable is the median house value for California districts,\nexpressed in hundreds of thousands of dollars ($100,000).\n\nThis dataset was derived from the 1990 U.S. census, using one row per census\nblock group. A block group is the smallest geographical unit for which the U.S.\nCensus Bureau publishes sample data (a block group typically has a population\nof 600 to 3,000 people).\n\nA household is a group of people residing within a home. Since the average\nnumber of rooms and bedrooms in this dataset are provided per household, these\ncolumns may take surprisingly large values for block groups with few households\nand many empty houses, such as vacation resorts.\n\nIt can be downloaded/loaded using the\n:func:`sklearn.datasets.fetch_california_housing` function.\n\n.. topic:: References\n\n - Pace, R. Kelley and Ronald Barry, Sparse Spatial Autoregressions,\n Statistics and Probability Letters, 33 (1997) 291-297\n'}

# Split the data into training and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Standardize the features

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)

#X_train_scaled = X_train

#X_test_scaled = X_test

print(np.shape(X_test))

(4128, 8)

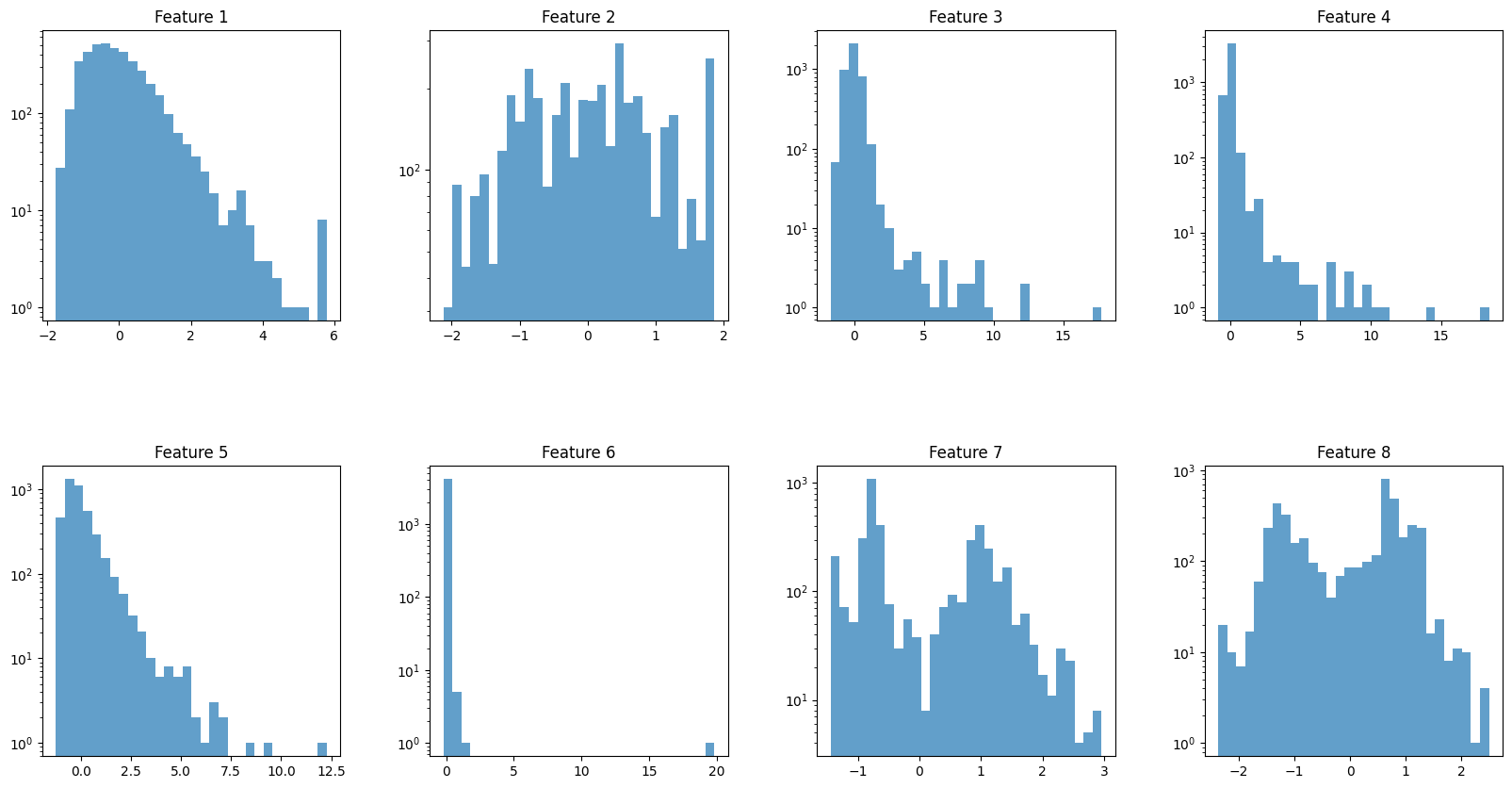

import matplotlib.pyplot as plt

# Assuming X_test is defined and has the shape (4128, 8)

# Set up the matplotlib figure

fig, axs = plt.subplots(nrows=2, ncols=4, figsize=(20, 10)) # Adjust size as needed

fig.subplots_adjust(hspace=0.5, wspace=0.3) # Adjust spacing between plots as needed

# Iterate over all features (columns) in X_test

for i in range(8):

# Determine the position of the subplot

row = i // 4

col = i % 4

ax = axs[row, col]

# Plot the histogram for the i-th feature

ax.hist(X_test_scaled[:, i], bins=30, alpha=0.7, log=True)

ax.set_title(f'Feature {i+1}')

# Display the plot

plt.show()

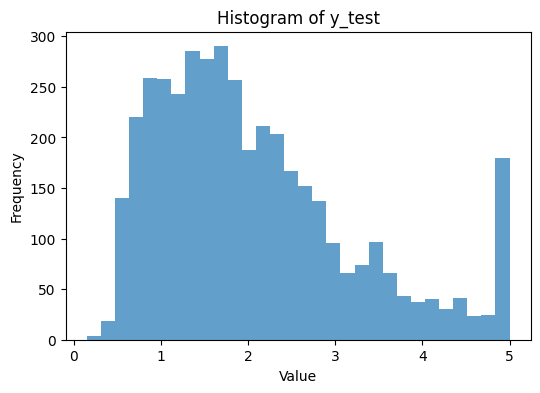

# Plotting the histogram for y_test

plt.figure(figsize=(6, 4)) # Adjust figure size as needed

plt.hist(y_test.ravel(), bins=30, alpha=0.7) # Use .ravel() to flatten y_test to 1D

plt.title('Histogram of y_test')

plt.xlabel('Value')

plt.ylabel('Frequency')

plt.show()

Linear Regression#

# Initialize and train a linear regression model on the scaled data

lin_reg = LinearRegression()

lin_reg.fit(X_train_scaled, y_train)

# Predict on the scaled training set and the scaled test set

y_train_pred = lin_reg.predict(X_train_scaled)

y_test_pred = lin_reg.predict(X_test_scaled)

# Evaluate the model

train_mse = mean_squared_error(y_train, y_train_pred)

test_mse = mean_squared_error(y_test, y_test_pred)

print(f'Training Mean Squared Error: {train_mse:.4f}')

print(f'Test Mean Squared Error: {test_mse:.4f}')

# Coefficients and Intercept

print('Intercept:', lin_reg.intercept_)

print('Coefficients:', lin_reg.coef_)

Training Mean Squared Error: 0.5179

Test Mean Squared Error: 0.5559

Intercept: 2.071946937378619

Coefficients: [ 0.85438303 0.12254624 -0.29441013 0.33925949 -0.00230772 -0.0408291

-0.89692888 -0.86984178]

# without scaling

#Training Mean Squared Error: 0.5179

#Test Mean Squared Error: 0.5559

#Intercept: -37.02327770606391

#Coefficients: [ 4.48674910e-01 9.72425752e-03 -1.23323343e-01 7.83144907e-01 -2.02962058e-06 -3.52631849e-03 -4.19792487e-01 -4.33708065e-01]

Bayesian Linear Regression with PyMC#

# Define the model

with pm.Model() as model_lin:

# Priors for unknown model parameters

alpha = pm.Normal('alpha', mu=0, sigma=10)

beta = pm.Normal('beta', mu=0, sigma=10, shape=X_train.shape[1])

sigma = pm.HalfNormal('sigma', sigma=1)

# Expected value of outcome

mu = alpha + pm.math.dot(X_train_scaled, beta)

# Likelihood (sampling distribution) of observations

Y_obs = pm.Normal('Y_obs', mu=mu, sigma=sigma, observed=y_train)

# Sample from the posterior

idata_lin = pm.sample(500, chains=1) # lengthy: use burn-in of 1000 and 1000 draws for 1 chain

100.00% [1500/1500 00:14<00:00 Sampling chain 0, 0 divergences]

import arviz as az

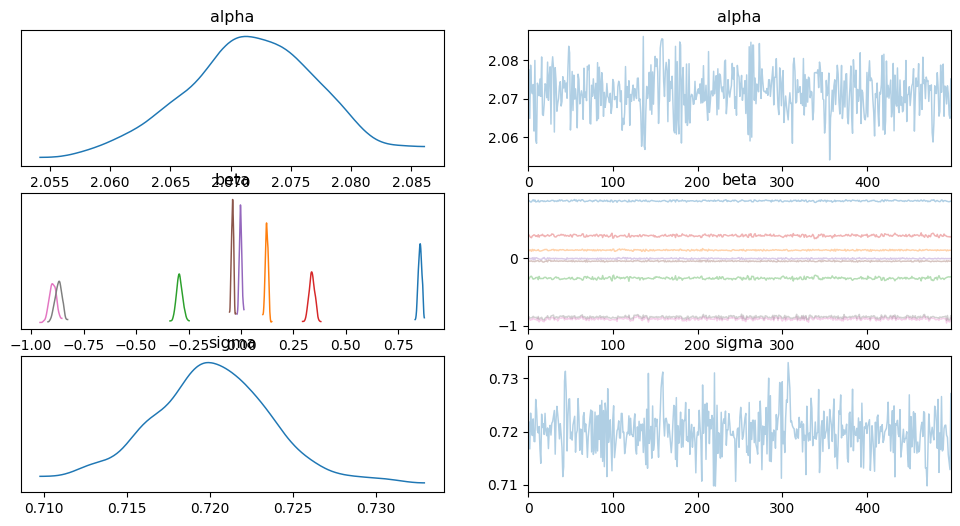

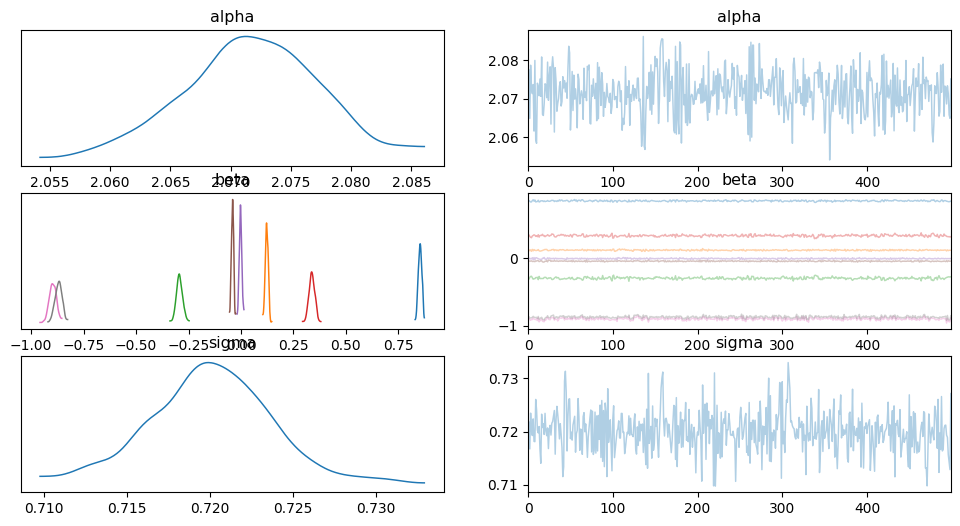

az.plot_trace(idata_lin)

array([[<Axes: title={'center': 'alpha'}>,

<Axes: title={'center': 'alpha'}>],

[<Axes: title={'center': 'beta'}>,

<Axes: title={'center': 'beta'}>],

[<Axes: title={'center': 'sigma'}>,

<Axes: title={'center': 'sigma'}>]], dtype=object)

pos_alpha = idata_lin.posterior['alpha'].mean(axis=0).values

pos_betas = idata_lin.posterior['beta'].mean(axis=0).values

#print(np.shape(pos_alpha))

print(np.shape(pos_betas))

#pos_alpha = pos_alpha.reshape((np.shape(pos_alpha)[1],))

#pos_betas = pos_betas.reshape((np.shape(pos_betas)[1],np.shape(pos_betas)[2]))

(500, 8)

(500,) (500, 8) (8, 4128)

<class 'numpy.ndarray'> <class 'numpy.ndarray'> <class 'numpy.ndarray'>

pos_y_test = pos_alpha[:, np.newaxis] + np.dot(pos_betas, X_test_scaled.T)

pos_y_train = pos_alpha[:, np.newaxis] + np.dot(pos_betas, X_train_scaled.T)

print(np.shape(pos_y_test), type(pos_y_test))

pos_y_test_mean = np.mean(pos_y_test, axis=0)

pos_y_train_mean = np.mean(pos_y_train, axis=0)

print(np.shape(pos_y_test_mean))

pos_y_test_lower = np.percentile(pos_y_test,3, axis=0)

pos_y_test_upper = np.percentile(pos_y_test,97, axis=0)

pos_y_train_lower = np.percentile(pos_y_train,3, axis=0)

pos_y_train_upper = np.percentile(pos_y_train,97, axis=0)

print(np.shape(pos_y_test_lower))

for i in range(len(y_test)):

if(i%50==0):

print(f"true: {y_test[i]:1.4f}, y_test_pred: {y_test_pred[i]:1.4f}, Bayes mean: {pos_y_test_mean[i]:1.4f}, range: ({pos_y_test_lower[i]:1.4f}, {pos_y_test_upper[i]:1.4f})")

(500, 4128) <class 'numpy.ndarray'>

(4128,)

(4128,)

true: 0.7100, y_test_pred: 0.7191, Bayes mean: 0.8282, range: (0.7996, 0.8556)

true: 1.8800, y_test_pred: 2.4037, Bayes mean: 2.5199, range: (2.5014, 2.5369)

true: 2.2940, y_test_pred: 1.5178, Bayes mean: 2.5583, range: (2.5374, 2.5781)

true: 1.5630, y_test_pred: 1.5097, Bayes mean: 2.1514, range: (2.1311, 2.1708)

true: 1.9640, y_test_pred: 2.3937, Bayes mean: 2.2789, range: (2.2600, 2.2955)

true: 0.7020, y_test_pred: 1.0502, Bayes mean: 0.6899, range: (0.6695, 0.7124)

true: 1.8310, y_test_pred: 0.6687, Bayes mean: 2.2953, range: (2.2688, 2.3216)

true: 1.1610, y_test_pred: 1.4168, Bayes mean: 1.5943, range: (1.5747, 1.6132)

true: 1.7710, y_test_pred: 2.5336, Bayes mean: 2.3466, range: (2.3202, 2.3735)

true: 4.3930, y_test_pred: 2.8634, Bayes mean: 2.7801, range: (2.7474, 2.8080)

true: 2.6050, y_test_pred: 2.2113, Bayes mean: 2.4015, range: (2.3789, 2.4223)

true: 2.9520, y_test_pred: 0.9937, Bayes mean: 2.1671, range: (2.1510, 2.1836)

true: 1.6770, y_test_pred: 1.4220, Bayes mean: 2.0871, range: (2.0717, 2.1032)

true: 2.3740, y_test_pred: 1.1735, Bayes mean: 3.4368, range: (3.4020, 3.4742)

true: 1.9840, y_test_pred: 1.9858, Bayes mean: 2.3951, range: (2.3671, 2.4208)

true: 1.6660, y_test_pred: 0.7142, Bayes mean: 1.6425, range: (1.6200, 1.6645)

true: 1.1110, y_test_pred: 0.9356, Bayes mean: 0.8779, range: (0.8339, 0.9273)

true: 5.0000, y_test_pred: 1.5730, Bayes mean: 2.9698, range: (2.9380, 2.9988)

true: 0.2750, y_test_pred: 2.6333, Bayes mean: -0.3888, range: (-0.4442, -0.3366)

true: 2.2540, y_test_pred: 3.3560, Bayes mean: 1.1468, range: (1.1229, 1.1718)

true: 1.8750, y_test_pred: 2.0999, Bayes mean: 1.6768, range: (1.6614, 1.6945)

true: 3.6400, y_test_pred: 1.8346, Bayes mean: 2.4500, range: (2.4240, 2.4795)

true: 5.0000, y_test_pred: 1.1454, Bayes mean: 4.0948, range: (4.0632, 4.1243)

true: 4.4290, y_test_pred: 4.8032, Bayes mean: 2.3340, range: (2.3027, 2.3668)

true: 2.0700, y_test_pred: 1.5665, Bayes mean: 1.8228, range: (1.8042, 1.8443)

true: 0.8760, y_test_pred: 4.2362, Bayes mean: 1.7924, range: (1.7595, 1.8254)

true: 0.9800, y_test_pred: 2.0385, Bayes mean: 1.8739, range: (1.8551, 1.8937)

true: 3.9300, y_test_pred: 1.5520, Bayes mean: 3.5645, range: (3.5330, 3.5971)

true: 0.7970, y_test_pred: 1.2078, Bayes mean: 1.0687, range: (1.0462, 1.0900)

true: 1.6900, y_test_pred: 1.6035, Bayes mean: 2.0719, range: (2.0553, 2.0884)

true: 2.2020, y_test_pred: 1.9321, Bayes mean: 2.8197, range: (2.7981, 2.8393)

true: 2.4560, y_test_pred: 1.6540, Bayes mean: 2.2592, range: (2.1960, 2.3228)

true: 1.4000, y_test_pred: 2.5838, Bayes mean: 0.6081, range: (0.5811, 0.6368)

true: 1.9100, y_test_pred: 2.4022, Bayes mean: 2.1911, range: (2.1763, 2.2058)

true: 2.1740, y_test_pred: 2.6450, Bayes mean: 2.6469, range: (2.6290, 2.6651)

true: 1.6050, y_test_pred: 2.9621, Bayes mean: 2.3035, range: (2.2867, 2.3214)

true: 0.4550, y_test_pred: 2.6647, Bayes mean: 0.4170, range: (0.3904, 0.4454)

true: 1.0160, y_test_pred: 2.1881, Bayes mean: 1.3898, range: (1.3684, 1.4115)

true: 1.9220, y_test_pred: 2.2026, Bayes mean: 2.5246, range: (2.4956, 2.5541)

true: 1.8580, y_test_pred: 2.7611, Bayes mean: 2.0160, range: (1.9812, 2.0522)

true: 2.5220, y_test_pred: 2.0046, Bayes mean: 2.0188, range: (1.9890, 2.0467)

true: 5.0000, y_test_pred: 3.1471, Bayes mean: 2.5501, range: (2.5269, 2.5721)

true: 2.7860, y_test_pred: 0.9452, Bayes mean: 2.4931, range: (2.4648, 2.5211)

true: 1.9140, y_test_pred: 1.0731, Bayes mean: 2.3644, range: (2.3502, 2.3780)

true: 2.3310, y_test_pred: 2.0241, Bayes mean: 2.1435, range: (2.1238, 2.1625)

true: 1.0360, y_test_pred: 2.4118, Bayes mean: 1.7932, range: (1.7759, 1.8109)

true: 0.9920, y_test_pred: 1.6802, Bayes mean: 1.1980, range: (1.1633, 1.2338)

true: 1.8840, y_test_pred: 3.1414, Bayes mean: 1.9298, range: (1.9040, 1.9579)

true: 1.7100, y_test_pred: 1.4824, Bayes mean: 2.1509, range: (2.1310, 2.1700)

true: 2.5080, y_test_pred: 2.2120, Bayes mean: 3.3363, range: (3.3029, 3.3652)

true: 1.5740, y_test_pred: 1.2696, Bayes mean: 2.3000, range: (2.2859, 2.3141)

true: 5.0000, y_test_pred: 2.3738, Bayes mean: 4.3889, range: (4.3570, 4.4205)

true: 0.8750, y_test_pred: 2.7551, Bayes mean: 1.2609, range: (1.2242, 1.2969)

true: 0.4660, y_test_pred: 1.7705, Bayes mean: 0.8677, range: (0.8392, 0.8951)

true: 1.9830, y_test_pred: 3.1743, Bayes mean: 1.4168, range: (1.3683, 1.4679)

true: 0.4390, y_test_pred: 1.7385, Bayes mean: 0.8814, range: (0.8499, 0.9138)

true: 1.9090, y_test_pred: 2.1866, Bayes mean: 1.9746, range: (1.9516, 1.9974)

true: 0.6750, y_test_pred: 1.6092, Bayes mean: 0.9138, range: (0.8939, 0.9363)

true: 1.3130, y_test_pred: 2.7028, Bayes mean: 2.0743, range: (2.0456, 2.1044)

true: 3.9000, y_test_pred: 2.3851, Bayes mean: 3.3703, range: (3.3418, 3.3979)

true: 1.5280, y_test_pred: 2.0705, Bayes mean: 1.7734, range: (1.7571, 1.7923)

true: 2.3280, y_test_pred: 1.7752, Bayes mean: 2.3420, range: (2.3203, 2.3645)

true: 2.4480, y_test_pred: 2.2463, Bayes mean: 2.3642, range: (2.3416, 2.3861)

true: 2.3330, y_test_pred: 1.4475, Bayes mean: 2.3559, range: (2.3381, 2.3740)

true: 3.7000, y_test_pred: 2.4799, Bayes mean: 2.5330, range: (2.5048, 2.5611)

true: 1.6230, y_test_pred: 1.9872, Bayes mean: 2.3838, range: (2.3631, 2.4051)

true: 1.3490, y_test_pred: 2.5150, Bayes mean: 1.7489, range: (1.7024, 1.7955)

true: 0.5750, y_test_pred: 2.8794, Bayes mean: -0.2778, range: (-0.3344, -0.2209)

true: 3.3240, y_test_pred: 1.6917, Bayes mean: 3.2900, range: (3.2610, 3.3192)

true: 2.2500, y_test_pred: 1.5525, Bayes mean: 2.4180, range: (2.3937, 2.4432)

true: 1.7800, y_test_pred: 4.6456, Bayes mean: 1.8437, range: (1.8193, 1.8682)

true: 1.6650, y_test_pred: 2.3504, Bayes mean: 2.0658, range: (2.0288, 2.1008)

true: 1.5820, y_test_pred: 1.7014, Bayes mean: 2.5318, range: (2.5130, 2.5504)

true: 4.1610, y_test_pred: 1.9083, Bayes mean: 2.3244, range: (2.3057, 2.3425)

true: 5.0000, y_test_pred: 1.9192, Bayes mean: 3.4275, range: (3.4025, 3.4504)

true: 1.4750, y_test_pred: 1.6407, Bayes mean: 2.0675, range: (2.0492, 2.0857)

true: 1.0830, y_test_pred: 1.7796, Bayes mean: 2.2980, range: (2.2721, 2.3258)

true: 4.5000, y_test_pred: 3.0174, Bayes mean: 1.3462, range: (1.3035, 1.3865)

true: 3.9770, y_test_pred: 1.6099, Bayes mean: 3.8453, range: (3.8079, 3.8849)

true: 1.5040, y_test_pred: 3.2436, Bayes mean: 2.1676, range: (2.1168, 2.2205)

true: 2.3800, y_test_pred: 2.6613, Bayes mean: 3.0320, range: (3.0101, 3.0539)

true: 1.7810, y_test_pred: 1.6718, Bayes mean: 2.1605, range: (2.1338, 2.1861)

true: 2.0880, y_test_pred: 1.1509, Bayes mean: 1.5704, range: (1.5461, 1.5969)

#--- comparing Linear Regression with Bayesian Linear Regression

print("LINEAR REGRESSION")

print(f'Training Mean Squared Error: {train_mse:.4f}')

print(f'Test Mean Squared Error: {test_mse:.4f}')

print("\n")

# Evaluate the model

train_mse_bayes = mean_squared_error(y_train, pos_y_train_mean)

test_mse_bayes = mean_squared_error(y_test, pos_y_test_mean)

print("BAYESIAN LINEAR REGRESSION")

print(f'Training Mean Squared Error: {train_mse_bayes:.4f}')

print(f'Test Mean Squared Error: {test_mse_bayes:.4f}')

LINEAR REGRESSION

Training Mean Squared Error: 0.5179

Test Mean Squared Error: 0.5559

BAYESIAN LINEAR REGRESSION

Training Mean Squared Error: 0.5212

Test Mean Squared Error: 0.5418

az.plot_trace(idata_lin)

array([[<Axes: title={'center': 'alpha'}>,

<Axes: title={'center': 'alpha'}>],

[<Axes: title={'center': 'beta'}>,

<Axes: title={'center': 'beta'}>],

[<Axes: title={'center': 'sigma'}>,

<Axes: title={'center': 'sigma'}>]], dtype=object)

az.summary(idata_pol)

| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| alpha | 1.930 | 0.005 | 1.922 | 1.940 | 0.000 | 0.000 | 1249.0 | 839.0 | 1.00 |

| beta[0] | 0.886 | 0.009 | 0.870 | 0.902 | 0.000 | 0.000 | 659.0 | 739.0 | 1.00 |

| beta[1] | 0.113 | 0.005 | 0.102 | 0.122 | 0.000 | 0.000 | 1011.0 | 610.0 | 1.00 |

| beta[2] | -0.337 | 0.017 | -0.368 | -0.305 | 0.001 | 0.000 | 616.0 | 697.0 | 1.01 |

| beta[3] | 0.400 | 0.017 | 0.367 | 0.433 | 0.001 | 0.000 | 732.0 | 417.0 | 1.01 |

| beta[4] | 0.036 | 0.004 | 0.028 | 0.044 | 0.000 | 0.000 | 1289.0 | 741.0 | 1.01 |

| beta[5] | -2.814 | 0.077 | -2.944 | -2.649 | 0.002 | 0.001 | 1319.0 | 572.0 | 1.01 |

| beta[6] | -0.773 | 0.014 | -0.796 | -0.745 | 0.001 | 0.000 | 598.0 | 754.0 | 1.00 |

| beta[7] | -0.730 | 0.013 | -0.757 | -0.706 | 0.001 | 0.000 | 620.0 | 736.0 | 1.00 |

| sigma | 0.448 | 0.005 | 0.439 | 0.458 | 0.000 | 0.000 | 720.0 | 647.0 | 1.01 |

| ν_ | 2.977 | 0.079 | 2.827 | 3.116 | 0.003 | 0.002 | 735.0 | 681.0 | 1.01 |

| ν | 2.977 | 0.079 | 2.827 | 3.116 | 0.003 | 0.002 | 735.0 | 681.0 | 1.01 |

Polynomial Linear regression#

# Define the model

with pm.Model() as model_pol:

# Priors for unknown model parameters

alpha = pm.Normal('alpha', mu=0, sigma=10)

beta = pm.Normal('beta', mu=0, sigma=10, shape=X_train.shape[1])

gamma = pm.Normal('gamma', mu=0, sigma=10, shape=X_train.shape[1])

sigma = pm.HalfNormal('sigma', sigma=1)

ν_ = pm.Exponential('ν_', 1/29)

ν = pm.Deterministic('ν', ν_ + 1)

# Expected value of outcome

mu = alpha + pm.math.dot(X_train_scaled, beta) + pm.math.dot(X_train_scaled**2, gamma)

# Likelihood (sampling distribution) of observations

#Y_obs = pm.Normal('Y_obs', mu=mu, sigma=sigma, observed=y_train)

Y_obs = pm.StudentT('Y_obs', mu=mu, sigma=sigma, nu=ν, observed=y_train) #ϵ

# Sample from the posterior

idata_pol = pm.sample(500, chains=2) # lengthy: use burn-in of 1000 and 1000 draws for 1 chain

100.00% [1500/1500 04:58<00:00 Sampling chain 0, 0 divergences]

100.00% [1500/1500 05:40<00:00 Sampling chain 1, 0 divergences]

az.summary(idata_pol)

| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| alpha | 1.943 | 0.011 | 1.922 | 1.963 | 0.004 | 0.003 | 8.0 | 479.0 | 1.20 |

| beta[0] | 0.868 | 0.013 | 0.844 | 0.891 | 0.005 | 0.004 | 6.0 | 189.0 | 1.30 |

| beta[1] | 0.110 | 0.005 | 0.100 | 0.120 | 0.000 | 0.000 | 193.0 | 633.0 | 1.03 |

| beta[2] | -0.330 | 0.024 | -0.373 | -0.285 | 0.013 | 0.010 | 4.0 | 271.0 | 1.54 |

| beta[3] | 0.480 | 0.030 | 0.429 | 0.532 | 0.017 | 0.013 | 4.0 | 91.0 | 1.61 |

| beta[4] | 0.052 | 0.008 | 0.038 | 0.067 | 0.003 | 0.002 | 6.0 | 202.0 | 1.26 |

| beta[5] | -1.768 | 0.167 | -1.993 | -1.540 | 0.114 | 0.095 | 3.0 | 221.0 | 1.83 |

| beta[6] | -0.870 | 0.017 | -0.903 | -0.840 | 0.001 | 0.001 | 352.0 | 508.0 | 1.01 |

| beta[7] | -0.824 | 0.016 | -0.854 | -0.796 | 0.001 | 0.001 | 364.0 | 529.0 | 1.01 |

| gamma[0] | 0.007 | 0.004 | 0.000 | 0.015 | 0.001 | 0.001 | 25.0 | 444.0 | 1.06 |

| gamma[1] | 0.002 | 0.005 | -0.007 | 0.011 | 0.001 | 0.001 | 11.0 | 194.0 | 1.14 |

| gamma[2] | 0.029 | 0.004 | 0.023 | 0.036 | 0.001 | 0.001 | 8.0 | 325.0 | 1.19 |

| gamma[3] | -0.035 | 0.004 | -0.042 | -0.026 | 0.002 | 0.001 | 8.0 | 318.0 | 1.20 |

| gamma[4] | -0.003 | 0.001 | -0.005 | -0.001 | 0.000 | 0.000 | 18.0 | 249.0 | 1.08 |

| gamma[5] | 0.639 | 0.628 | 0.016 | 1.399 | 0.437 | 0.368 | 3.0 | 125.0 | 1.83 |

| gamma[6] | 0.066 | 0.007 | 0.054 | 0.078 | 0.000 | 0.000 | 492.0 | 604.0 | 1.02 |

| gamma[7] | -0.080 | 0.008 | -0.095 | -0.066 | 0.001 | 0.000 | 118.0 | 586.0 | 1.04 |

| sigma | 0.437 | 0.006 | 0.426 | 0.447 | 0.002 | 0.002 | 7.0 | 198.0 | 1.22 |

| ν_ | 1.916 | 0.086 | 1.759 | 2.079 | 0.021 | 0.015 | 17.0 | 435.0 | 1.09 |

| ν | 2.916 | 0.086 | 2.759 | 3.079 | 0.021 | 0.015 | 17.0 | 435.0 | 1.09 |

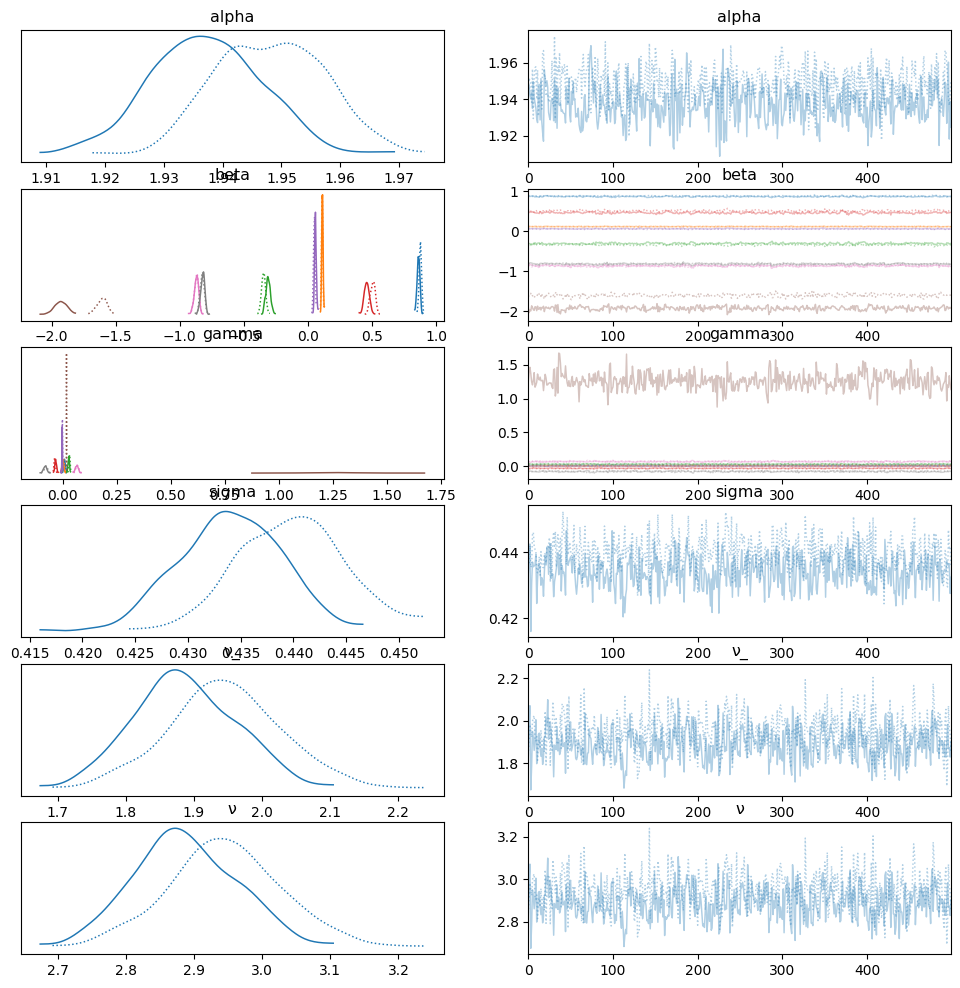

az.plot_trace(idata_pol, compact=True)

array([[<Axes: title={'center': 'alpha'}>,

<Axes: title={'center': 'alpha'}>],

[<Axes: title={'center': 'beta'}>,

<Axes: title={'center': 'beta'}>],

[<Axes: title={'center': 'gamma'}>,

<Axes: title={'center': 'gamma'}>],

[<Axes: title={'center': 'sigma'}>,

<Axes: title={'center': 'sigma'}>],

[<Axes: title={'center': 'ν_'}>, <Axes: title={'center': 'ν_'}>],

[<Axes: title={'center': 'ν'}>, <Axes: title={'center': 'ν'}>]],

dtype=object)

pos_alpha_pol = idata_pol.posterior['alpha'].mean(axis=0).values

pos_betas_pol = idata_pol.posterior['beta'].mean(axis=0).values

pos_gammas_pol = idata_pol.posterior['gamma'].mean(axis=0).values

pos_y_test_pol = pos_alpha_pol[:, np.newaxis] + np.dot(pos_betas_pol, X_test_scaled.T) + np.dot(pos_gammas_pol, (X_test_scaled**2).T)

pos_y_test_mean_pol = np.mean(pos_y_test_pol, axis=0)

pos_y_train_pol = pos_alpha_pol[:, np.newaxis] + np.dot(pos_betas_pol, X_train_scaled.T) + np.dot(pos_gammas_pol, (X_train_scaled**2).T)

pos_y_train_mean_pol = np.mean(pos_y_train_pol, axis=0)

pos_y_test_lower_pol = np.percentile(pos_y_test_pol,3, axis=0)

pos_y_test_upper_pol = np.percentile(pos_y_test_pol,97, axis=0)

pos_y_train_lower_pol = np.percentile(pos_y_train_pol,3, axis=0)

pos_y_train_upper_pol = np.percentile(pos_y_train_pol,97, axis=0)

for i in range(len(y_test)):

if(i%50==0):

print(f"true: {y_test[i]:1.4f}, y_test_pred: {y_test_pred[i]:1.4f}, Bayes mean: {pos_y_test_mean_pol[i]:1.4f}, range: ({pos_y_test_lower_pol[i]:1.4f}, {pos_y_test_upper_pol[i]:1.4f})")

true: 0.7100, y_test_pred: 0.7191, Bayes mean: 0.8540, range: (0.8388, 0.8722)

true: 1.8800, y_test_pred: 2.4037, Bayes mean: 2.0451, range: (2.0291, 2.0622)

true: 2.2940, y_test_pred: 1.5178, Bayes mean: 2.3091, range: (2.2954, 2.3226)

true: 1.5630, y_test_pred: 1.5097, Bayes mean: 2.0410, range: (2.0267, 2.0553)

true: 1.9640, y_test_pred: 2.3937, Bayes mean: 2.3225, range: (2.3085, 2.3389)

true: 0.7020, y_test_pred: 1.0502, Bayes mean: 0.7358, range: (0.7131, 0.7576)

true: 1.8310, y_test_pred: 0.6687, Bayes mean: 1.9189, range: (1.9022, 1.9385)

true: 1.1610, y_test_pred: 1.4168, Bayes mean: 1.5029, range: (1.4898, 1.5159)

true: 1.7710, y_test_pred: 2.5336, Bayes mean: 2.2480, range: (2.2301, 2.2658)

true: 4.3930, y_test_pred: 2.8634, Bayes mean: 3.0842, range: (3.0581, 3.1112)

true: 2.6050, y_test_pred: 2.2113, Bayes mean: 2.2908, range: (2.2762, 2.3061)

true: 2.9520, y_test_pred: 0.9937, Bayes mean: 2.3018, range: (2.2846, 2.3199)

true: 1.6770, y_test_pred: 1.4220, Bayes mean: 1.8248, range: (1.8134, 1.8361)

true: 2.3740, y_test_pred: 1.1735, Bayes mean: 3.4531, range: (3.4253, 3.4830)

true: 1.9840, y_test_pred: 1.9858, Bayes mean: 2.6404, range: (2.6144, 2.6696)

true: 1.6660, y_test_pred: 0.7142, Bayes mean: 1.3694, range: (1.3554, 1.3853)

true: 1.1110, y_test_pred: 0.9356, Bayes mean: 1.1600, range: (1.1228, 1.1951)

true: 5.0000, y_test_pred: 1.5730, Bayes mean: 3.0222, range: (2.9955, 3.0486)

true: 0.2750, y_test_pred: 2.6333, Bayes mean: -0.5147, range: (-0.5928, -0.4481)

true: 2.2540, y_test_pred: 3.3560, Bayes mean: 1.3710, range: (1.3464, 1.3942)

true: 1.8750, y_test_pred: 2.0999, Bayes mean: 1.3067, range: (1.2926, 1.3196)

true: 3.6400, y_test_pred: 1.8346, Bayes mean: 2.5451, range: (2.5224, 2.5701)

true: 5.0000, y_test_pred: 1.1454, Bayes mean: 4.0484, range: (4.0255, 4.0739)

true: 4.4290, y_test_pred: 4.8032, Bayes mean: 2.3408, range: (2.3165, 2.3672)

true: 2.0700, y_test_pred: 1.5665, Bayes mean: 1.5708, range: (1.5576, 1.5822)

true: 0.8760, y_test_pred: 4.2362, Bayes mean: 1.8450, range: (1.8214, 1.8696)

true: 0.9800, y_test_pred: 2.0385, Bayes mean: 1.5912, range: (1.5802, 1.6022)

true: 3.9300, y_test_pred: 1.5520, Bayes mean: 3.5345, range: (3.5136, 3.5538)

true: 0.7970, y_test_pred: 1.2078, Bayes mean: 1.0205, range: (1.0037, 1.0372)

true: 1.6900, y_test_pred: 1.6035, Bayes mean: 1.6370, range: (1.6257, 1.6486)

true: 2.2020, y_test_pred: 1.9321, Bayes mean: 2.5729, range: (2.5561, 2.5893)

true: 2.4560, y_test_pred: 1.6540, Bayes mean: 2.3260, range: (2.2929, 2.3588)

true: 1.4000, y_test_pred: 2.5838, Bayes mean: 0.7366, range: (0.7090, 0.7600)

true: 1.9100, y_test_pred: 2.4022, Bayes mean: 2.2168, range: (2.2048, 2.2301)

true: 2.1740, y_test_pred: 2.6450, Bayes mean: 2.1959, range: (2.1798, 2.2119)

true: 1.6050, y_test_pred: 2.9621, Bayes mean: 2.3587, range: (2.3457, 2.3722)

true: 0.4550, y_test_pred: 2.6647, Bayes mean: 0.4610, range: (0.4433, 0.4789)

true: 1.0160, y_test_pred: 2.1881, Bayes mean: 1.4007, range: (1.3885, 1.4143)

true: 1.9220, y_test_pred: 2.2026, Bayes mean: 2.6259, range: (2.6045, 2.6484)

true: 1.8580, y_test_pred: 2.7611, Bayes mean: 1.8910, range: (1.8713, 1.9131)

true: 2.5220, y_test_pred: 2.0046, Bayes mean: 2.1495, range: (2.1294, 2.1696)

true: 5.0000, y_test_pred: 3.1471, Bayes mean: 2.6115, range: (2.5935, 2.6301)

true: 2.7860, y_test_pred: 0.9452, Bayes mean: 2.6628, range: (2.6410, 2.6866)

true: 1.9140, y_test_pred: 1.0731, Bayes mean: 2.0632, range: (2.0520, 2.0745)

true: 2.3310, y_test_pred: 2.0241, Bayes mean: 2.1976, range: (2.1825, 2.2152)

true: 1.0360, y_test_pred: 2.4118, Bayes mean: 1.3813, range: (1.3706, 1.3934)

true: 0.9920, y_test_pred: 1.6802, Bayes mean: 1.1199, range: (1.0816, 1.1583)

true: 1.8840, y_test_pred: 3.1414, Bayes mean: 2.0106, range: (1.9924, 2.0292)

true: 1.7100, y_test_pred: 1.4824, Bayes mean: 2.1493, range: (2.1320, 2.1675)

true: 2.5080, y_test_pred: 2.2120, Bayes mean: 3.0535, range: (3.0279, 3.0807)

true: 1.5740, y_test_pred: 1.2696, Bayes mean: 2.0070, range: (1.9961, 2.0185)

true: 5.0000, y_test_pred: 2.3738, Bayes mean: 4.3064, range: (4.2808, 4.3301)

true: 0.8750, y_test_pred: 2.7551, Bayes mean: 1.6257, range: (1.5975, 1.6536)

true: 0.4660, y_test_pred: 1.7705, Bayes mean: 0.4762, range: (0.4573, 0.4954)

true: 1.9830, y_test_pred: 3.1743, Bayes mean: 1.3224, range: (1.2957, 1.3498)

true: 0.4390, y_test_pred: 1.7385, Bayes mean: 0.9923, range: (0.9595, 1.0260)

true: 1.9090, y_test_pred: 2.1866, Bayes mean: 2.0339, range: (2.0166, 2.0522)

true: 0.6750, y_test_pred: 1.6092, Bayes mean: 1.0142, range: (0.9944, 1.0345)

true: 1.3130, y_test_pred: 2.7028, Bayes mean: 1.7823, range: (1.7528, 1.8130)

true: 3.9000, y_test_pred: 2.3851, Bayes mean: 3.0825, range: (3.0640, 3.1012)

true: 1.5280, y_test_pred: 2.0705, Bayes mean: 1.0998, range: (1.0784, 1.1206)

true: 2.3280, y_test_pred: 1.7752, Bayes mean: 2.1859, range: (2.1713, 2.2014)

true: 2.4480, y_test_pred: 2.2463, Bayes mean: 2.4089, range: (2.3949, 2.4240)

true: 2.3330, y_test_pred: 1.4475, Bayes mean: 2.1611, range: (2.1481, 2.1735)

true: 3.7000, y_test_pred: 2.4799, Bayes mean: 2.5793, range: (2.5536, 2.6039)

true: 1.6230, y_test_pred: 1.9872, Bayes mean: 2.4077, range: (2.3896, 2.4262)

true: 1.3490, y_test_pred: 2.5150, Bayes mean: 1.5193, range: (1.4931, 1.5476)

true: 0.5750, y_test_pred: 2.8794, Bayes mean: 0.1773, range: (0.1321, 0.2225)

true: 3.3240, y_test_pred: 1.6917, Bayes mean: 3.0592, range: (3.0410, 3.0769)

true: 2.2500, y_test_pred: 1.5525, Bayes mean: 2.4350, range: (2.4156, 2.4551)

true: 1.7800, y_test_pred: 4.6456, Bayes mean: 1.3513, range: (1.3320, 1.3701)

true: 1.6650, y_test_pred: 2.3504, Bayes mean: 2.1178, range: (2.0840, 2.1518)

true: 1.5820, y_test_pred: 1.7014, Bayes mean: 2.1128, range: (2.0980, 2.1287)

true: 4.1610, y_test_pred: 1.9083, Bayes mean: 2.1967, range: (2.1834, 2.2114)

true: 5.0000, y_test_pred: 1.9192, Bayes mean: 3.4709, range: (3.4533, 3.4893)

true: 1.4750, y_test_pred: 1.6407, Bayes mean: 1.6578, range: (1.6418, 1.6741)

true: 1.0830, y_test_pred: 1.7796, Bayes mean: 2.0512, range: (2.0325, 2.0719)

true: 4.5000, y_test_pred: 3.0174, Bayes mean: 1.7865, range: (1.7490, 1.8230)

true: 3.9770, y_test_pred: 1.6099, Bayes mean: 3.8891, range: (3.8598, 3.9218)

true: 1.5040, y_test_pred: 3.2436, Bayes mean: 2.1547, range: (2.1249, 2.1854)

true: 2.3800, y_test_pred: 2.6613, Bayes mean: 2.6496, range: (2.6309, 2.6693)

true: 1.7810, y_test_pred: 1.6718, Bayes mean: 1.9610, range: (1.9419, 1.9828)

true: 2.0880, y_test_pred: 1.1509, Bayes mean: 1.4923, range: (1.4754, 1.5084)

#--- comparing Linear Regression with Polynomial Bayesian Linear Regression

print("LINEAR REGRESSION")

print(f'Training Mean Squared Error: {train_mse:.4f}')

print(f'Test Mean Squared Error: {test_mse:.4f}')

print("\n")

# Evaluate the model

train_mse_bayes_rob = mean_squared_error(y_train, pos_y_train_mean_rob)

test_mse_bayes_rob = mean_squared_error(y_test, pos_y_test_mean_rob)

print("BAYESIAN LINEAR REGRESSION")

print(f'Training Mean Squared Error: {train_mse_bayes:.4f}')

print(f'Test Mean Squared Error: {test_mse_bayes:.4f}')

print("\n")

print("BAYESIAN POLYNOMIAL REGRESSION")

print(f'Training Mean Squared Error: {train_mse_bayes_rob:.4f}')

print(f'Test Mean Squared Error: {test_mse_bayes_rob:.4f}')

LINEAR REGRESSION

Training Mean Squared Error: 0.5179

Test Mean Squared Error: 0.5559

BAYESIAN LINEAR REGRESSION

Training Mean Squared Error: 0.5212

Test Mean Squared Error: 0.5418

BAYESIAN POLYNOMIAL REGRESSION

Training Mean Squared Error: 0.5231

Test Mean Squared Error: 0.5892

Model Comparison#

#---------- Posterior Predictive Checks

y_l = pm.sample_posterior_predictive(idata_lin,model=model_lin)

100.00% [500/500 00:00<00:00]

y_p = pm.sample_posterior_predictive(idata_pol,model=model_pol)

100.00% [1000/1000 00:02<00:00]

y_l

arviz.InferenceData

-

<xarray.Dataset> Dimensions: (chain: 1, draw: 500, Y_obs_dim_2: 16512) Coordinates: * chain (chain) int64 0 * draw (draw) int64 0 1 2 3 4 5 6 7 ... 493 494 495 496 497 498 499 * Y_obs_dim_2 (Y_obs_dim_2) int64 0 1 2 3 4 ... 16507 16508 16509 16510 16511 Data variables: Y_obs (chain, draw, Y_obs_dim_2) float64 2.126 3.056 ... 3.535 2.128 Attributes: created_at: 2024-03-10T00:10:15.997634 arviz_version: 0.15.1 inference_library: pymc inference_library_version: 5.10.4 -

<xarray.Dataset> Dimensions: (Y_obs_dim_0: 16512) Coordinates: * Y_obs_dim_0 (Y_obs_dim_0) int64 0 1 2 3 4 ... 16507 16508 16509 16510 16511 Data variables: Y_obs (Y_obs_dim_0) float64 1.03 3.821 1.726 ... 2.221 2.835 3.25 Attributes: created_at: 2024-03-10T00:10:15.999430 arviz_version: 0.15.1 inference_library: pymc inference_library_version: 5.10.4

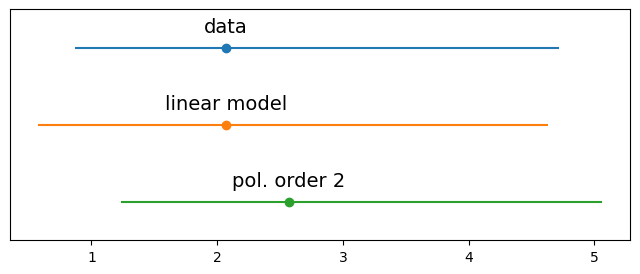

plt.figure(figsize=(8,3))

y_o = y_train

y_l = y_l.posterior_predictive['Y_obs'].mean(axis=0).mean(axis=0).values

y_p = y_p.posterior_predictive['y_pred'].mean(axis=0).mean(axis=0).values

data = [y_o, y_l, y_p]

labels = ['data', 'linear model', 'pol. order 2']

for i, d in enumerate(data):

mean = d.mean()

err = np.percentile(d, [25,75])

plt.errorbar(mean,-i,xerr=[[err[0]],[err[1]]], fmt='o')

plt.text(mean,-i+0.2, labels[i], ha='center', fontsize=14)

plt.ylim([-i-0.5,0.5])

plt.yticks([])

([], [])

#---------- Information Criteria

with model_lin:

pm.compute_log_likelihood(idata_lin)

with model_pol:

pm.compute_log_likelihood(idata_pol)

100.00% [1000/1000 00:01<00:00]

idata_pol

arviz.InferenceData

-

<xarray.Dataset> Dimensions: (chain: 2, draw: 500, beta_dim_0: 8, gamma_dim_0: 8) Coordinates: * chain (chain) int64 0 1 * draw (draw) int64 0 1 2 3 4 5 6 7 ... 493 494 495 496 497 498 499 * beta_dim_0 (beta_dim_0) int64 0 1 2 3 4 5 6 7 * gamma_dim_0 (gamma_dim_0) int64 0 1 2 3 4 5 6 7 Data variables: alpha (chain, draw) float64 1.942 1.948 1.942 ... 1.937 1.942 1.95 beta (chain, draw, beta_dim_0) float64 0.8741 0.1163 ... -0.8466 gamma (chain, draw, gamma_dim_0) float64 0.005186 ... -0.08653 sigma (chain, draw) float64 0.4335 0.4367 0.4426 ... 0.4418 0.4355 ν_ (chain, draw) float64 1.823 2.003 2.07 ... 1.966 1.942 1.847 ν (chain, draw) float64 2.823 3.003 3.07 ... 2.966 2.942 2.847 Attributes: created_at: 2024-03-10T00:08:27.922198 arviz_version: 0.15.1 inference_library: pymc inference_library_version: 5.10.4 sampling_time: 638.5446758270264 tuning_steps: 1000 -

<xarray.Dataset> Dimensions: (chain: 2, draw: 500) Coordinates: * chain (chain) int64 0 1 * draw (draw) int64 0 1 2 3 4 5 ... 494 495 496 497 498 499 Data variables: (12/17) process_time_diff (chain, draw) float64 0.173 0.1644 ... 0.2309 0.2423 n_steps (chain, draw) float64 127.0 127.0 ... 127.0 127.0 diverging (chain, draw) bool False False False ... False False largest_eigval (chain, draw) float64 nan nan nan nan ... nan nan nan energy (chain, draw) float64 1.581e+04 ... 1.59e+04 max_energy_error (chain, draw) float64 -0.2077 5.983 ... -0.3784 ... ... acceptance_rate (chain, draw) float64 0.9707 0.6147 ... 0.9992 0.9931 step_size (chain, draw) float64 0.0274 0.0274 ... 0.03957 step_size_bar (chain, draw) float64 0.03393 0.03393 ... 0.03759 reached_max_treedepth (chain, draw) bool False False False ... False False smallest_eigval (chain, draw) float64 nan nan nan nan ... nan nan nan perf_counter_start (chain, draw) float64 1.221e+04 ... 1.264e+04 Attributes: created_at: 2024-03-10T00:08:27.941327 arviz_version: 0.15.1 inference_library: pymc inference_library_version: 5.10.4 sampling_time: 638.5446758270264 tuning_steps: 1000 -

<xarray.Dataset> Dimensions: (y_pred_dim_0: 16512) Coordinates: * y_pred_dim_0 (y_pred_dim_0) int64 0 1 2 3 4 ... 16508 16509 16510 16511 Data variables: y_pred (y_pred_dim_0) float64 1.33 0.375 0.613 ... 2.641 4.869 2.826 Attributes: created_at: 2024-03-10T00:08:27.950929 arviz_version: 0.15.1 inference_library: pymc inference_library_version: 5.10.4

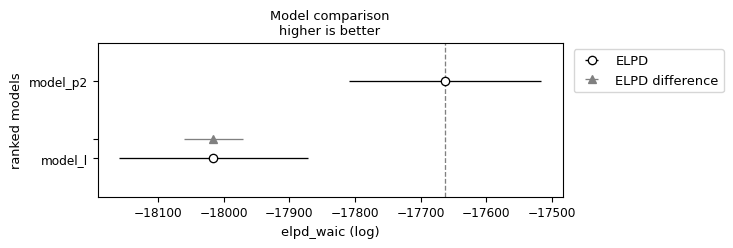

df_compare = az.compare({"model_l": idata_lin, "model_p2": idata_pol}, ic='waic') #loo is recommended

df_compare

/usr/local/lib/python3.10/dist-packages/arviz/stats/stats.py:1645: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(

/usr/local/lib/python3.10/dist-packages/arviz/stats/stats.py:1645: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(

| rank | elpd_waic | p_waic | elpd_diff | weight | se | dse | warning | scale | |

|---|---|---|---|---|---|---|---|---|---|

| model_p2 | 0 | -16378.827016 | 690.666675 | 0.000000 | 0.634616 | 394.119950 | 0.000000 | True | log |

| model_l | 1 | -18016.084000 | 24.350585 | 1637.256984 | 0.365384 | 144.157057 | 420.237166 | True | log |

az.plot_compare(df_compare, insample_dev=False)

<Axes: title={'center': 'Model comparison\nhigher is better'}, xlabel='elpd_waic (log)', ylabel='ranked models'>