MOBO

Contents

MOBO#

This exercise walks through the Multi objective Bayesian Optimization using the Toy Problem.

# run only once during the notebook execution

!git clone https://github.com/cfteach/modules.git &> /dev/null

!pip install ax-platform &> /dev/null

!pip install ipyvolume &> /dev/null

!pip install plotly

Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/

Requirement already satisfied: plotly in /usr/local/lib/python3.7/dist-packages (5.5.0)

Requirement already satisfied: six in /usr/local/lib/python3.7/dist-packages (from plotly) (1.15.0)

Requirement already satisfied: tenacity>=6.2.0 in /usr/local/lib/python3.7/dist-packages (from plotly) (8.0.1)

%load_ext autoreload

%autoreload 2

import ipyvolume as ipv

import ipywidgets as widgets

from IPython.display import display, Math, Latex

import os

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

#import AI4NP_detector_opt.sol2.detector2 as detector2

import modules.detector2 as detector2

import re

import pickle

import dill

import torch

from ax.metrics.noisy_function import GenericNoisyFunctionMetric

from ax.service.utils.report_utils import exp_to_df #https://ax.dev/api/service.html#ax.service.utils.report_utils.exp_to_df

from ax.runners.synthetic import SyntheticRunner

# Plotting imports and initialization

from ax.utils.notebook.plotting import render, init_notebook_plotting

from ax.plot.contour import plot_contour

from ax.plot.pareto_utils import compute_posterior_pareto_frontier

from ax.plot.pareto_frontier import plot_pareto_frontier

#init_notebook_plotting()

# Model registry for creating multi-objective optimization models.

from ax.modelbridge.registry import Models

# Analysis utilities, including a method to evaluate hypervolumes

from ax.modelbridge.modelbridge_utils import observed_hypervolume

from ax import SumConstraint

from ax import OrderConstraint

from ax import ParameterConstraint

from ax.core.search_space import SearchSpace

from ax.core.parameter import RangeParameter,ParameterType

from ax.core.objective import MultiObjective, Objective, ScalarizedObjective

from ax.core.optimization_config import ObjectiveThreshold, MultiObjectiveOptimizationConfig

from ax.core.experiment import Experiment

from botorch.utils.multi_objective.box_decompositions.dominated import DominatedPartitioning

from ax.core.data import Data

from ax.core.types import ComparisonOp

from sklearn.utils import shuffle

from functools import wraps

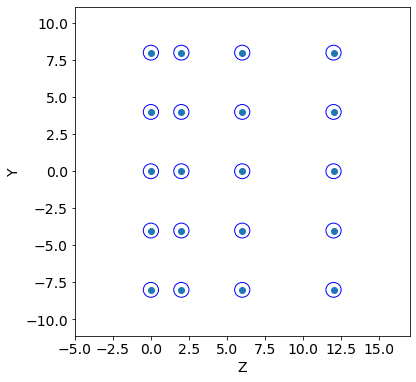

Create detector geometry and simulate tracks#

The module detector creates a simple 2D geometry of a wire based tracker made by 4 planes.

The adjustable parameters are the radius of each wire, the pitch (along the y axis), and the shift along y and z of a plane with respect to the previous one.

A total of 8 parameters can be tuned.

The goal of this toy model, is to tune the detector design so to optimize the efficiency (fraction of tracks which are detected) as well as the cost for its realization. As a proxy for the cost, we use the material/volume (the surface in 2D) of the detector. For a track to be detetected, in the efficiency definition we require at least two wires hit by the track.

So we want to maximize the efficiency (defined in detector.py) and minimize the cost.

LIST OF PARAMETERS#

(baseline values)

R = .5 [cm]

pitch = 4.0 [cm]

y1 = 0.0, y2 = 0.0, y3 = 0.0, z1 = 2.0, z2 = 4.0, z3 = 6.0 [cm]

# CONSTANT PARAMETERS

#------ define mother region ------#

y_min=-10.1

y_max=10.1

N_tracks = 1000

print("::::: BASELINE PARAMETERS :::::")

R = .5

pitch = 4.0

y1 = 0.0

y2 = 0.0

y3 = 0.0

z1 = 2.0

z2 = 4.0

z3 = 6.0

print("R, pitch, y1, y2, y3, z1, z2, z3: ", R, pitch, y1, y2, y3, z1, z2, z3,"\n")

#------------- GEOMETRY ---------------#

print(":::: INITIAL GEOMETRY ::::")

tr = detector2.Tracker(R, pitch, y1, y2, y3, z1, z2, z3)

Z, Y = tr.create_geometry()

num_wires = detector2.calculate_wires(Y, y_min, y_max)

volume = detector2.wires_volume(Y, y_min, y_max,R)

detector2.geometry_display(Z, Y, R, y_min=y_min, y_max=y_max,block=False,pause=5) #5

print("# of wires: ", num_wires, ", volume: ", volume)

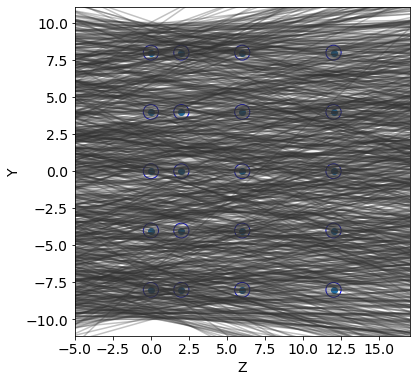

#------------- TRACK GENERATION -----------#

print(":::: TRACK GENERATION ::::")

t = detector2.Tracks(b_min=y_min, b_max=y_max, alpha_mean=0, alpha_std=0.2)

tracks = t.generate(N_tracks)

detector2.geometry_display(Z, Y, R, y_min=y_min, y_max=y_max,block=False, pause=-1)

detector2.tracks_display(tracks, Z,block=False,pause=-1)

#a track is detected if at least two wires have been hit

score = detector2.get_score(Z, Y, tracks, R)

frac_detected = score[0]

resolution = score[1]

print("fraction of tracks detected: ",frac_detected)

print("resolution: ",resolution)

::::: BASELINE PARAMETERS :::::

R, pitch, y1, y2, y3, z1, z2, z3: 0.5 4.0 0.0 0.0 0.0 2.0 4.0 6.0

:::: INITIAL GEOMETRY ::::

# of wires: 20 , volume: 62.800000000000004

:::: TRACK GENERATION ::::

fraction of tracks detected: 0.264

resolution: 0.24613882479204957

Define Objectives#

Defines a class for the objectives of the problem that can be used in the MOO.

class objectives():

def __init__(self,tracks,y_min,y_max):

self.tracks = tracks

self.y_min = y_min

self.y_max = y_max

def wrapper_geometry(fun):

def inner(self):

R, pitch, y1, y2, y3, z1, z2, z3 = self.X

self.geometry(R, pitch, y1, y2, y3, z1, z2, z3)

return fun(self)

return inner

def update_tracks(self, new_tracks):

self.tracks = new_tracks

def update_design_point(self,X):

self.X = X

def geometry(self,R, pitch, y1, y2, y3, z1, z2, z3):

tr = detector2.Tracker(R, pitch, y1, y2, y3, z1, z2, z3)

self.R = R

self.Z, self.Y = tr.create_geometry()

@wrapper_geometry

def calc_score(self):

res = detector2.get_score(self.Z, self.Y, self.tracks, self.R)

assert res[0] >= 0 and res[1] >= 0,"Fraction or Resolution negative."

return res

def get_score(self,X):

R, pitch, y1, y2, y3, z1, z2, z3 = X

self.geometry(R, pitch, y1, y2, y3, z1, z2, z3)

res = detector2.get_score(self.Z, self.Y, self.tracks, self.R)

return res

def get_volume(self):

volume = detector2.wires_volume(self.Y, self.y_min, self.y_max,self.R)

return volume

res = objectives(tracks,y_min,y_max)

#res.geometry(R, pitch, y1, y2, y3, z1, z2, z3)

X = R, pitch, y1, y2, y3, z1, z2, z3

#fscore = res.get_score(X)

res.update_design_point(X)

fscore = res.calc_score()[0]

fvolume = res.get_volume()

print("...check: ", fvolume, fscore)

...check: 62.800000000000004 0.264

Multi-Objective Optimization using BO#

Generally a Multi Objective Bayesian optimization can be summarized in the following steps

Initial Sample Generation

Generate

N_INITinital samples to explore. In our example we shall do this usingSOBOLalgorithm.Evaluate the performance of the generated samples. Use the

objectivesclass to evaluate the performace.Extract solutions that are non dominating to extract the approximated pareto front solutions.

Optmiziation loop

Train surrogate model using the observed data. In this example, we will be using a GP with

maternkernel as our surrogate model for training.Use a Acquisition function to suggest the next set of points

BATCH_SIZE. In this example, we useqNEHVIwhich uses efficientCached Box Decompositionmethods to compute the Expected Hyper Volume Increase.Evaluate the suggested points, Extract Non dominated solutions and repeat until Termination criterion is reached. In this example we shall run until

N_BATCHESis reached

More details

We will be using

ax-platform(https://ax.dev) for this exercise.In this example we will be using Multi-Objective Bayesian Optimization (MOBO) using qNEHVI + SAASBO which is defined as a

Model SetupcalledFULLYBAYESIANMOO.Predefined

Model Setupcan be found here

There are 3 Obejectives in the problem, and all objectives are minimized.

Tracking inefficiency = 1 - efficiency

Volume is taken as second objective.

We add the resolution as a third objective. The average residual of the track hit from the wire centre is used as a proxy for the resolution for this toy-model

#---------------------- BOTORCH FUNCTIONS ------------------------#

def build_experiment(search_space,optimization_config):

experiment = Experiment(

name="pareto_experiment",

search_space=search_space,

optimization_config=optimization_config,

runner=SyntheticRunner(),

)

return experiment

def glob_fun(loc_fun):

@wraps(loc_fun)

def inner(xdic):

x_sorted = [xdic[p_name] for p_name in xdic.keys()] #it assumes x will be given as, e.g., dictionary

res = list(loc_fun(x_sorted))

return res

return inner

def initialize_experiment(experiment,N_INIT):

sobol = Models.SOBOL(search_space=experiment.search_space)

experiment.new_batch_trial(sobol.gen(N_INIT)).run()

return experiment.fetch_data()

@glob_fun

def ftot(xdic):

return (1- res.get_score(xdic)[0], res.get_volume(), res.get_score(xdic)[1])

def f1(xdic):

return ftot(xdic)[0] #obj1

def f2(xdic):

return ftot(xdic)[1] #obj2

def f3(xdic):

return ftot(xdic)[2] #obj3

tkwargs = {

"dtype": torch.double,

"device": torch.device("cuda" if torch.cuda.is_available() else "cpu"),

}

# Define Hyper-parameters for the optimization

# Define Hyper-parameters for the optimization

# Current limitation from colab:

# goes off RAM limits for

#N_BATCH=50; BATCH_SIZE=5; num_samples = 128 warmup_steps = 256

N_BATCH = 30

BATCH_SIZE = 5

dim_space = 8 # len(X)

N_INIT = 2 * (dim_space + 1) #

lowerv = np.array([0.5,2.5,0.,0.,0.,2.,2.,2.])

upperv = np.array([1.0,5.0,4.,4.,4.,10.,10.,10.])

# defining the search space one can also include constraints in this function

search_space = SearchSpace(

parameters=

[RangeParameter(name=f"x{i}", lower=lowerv[i], upper=upperv[i],

parameter_type=ParameterType.FLOAT) for i in range(dim_space)]

)

print (search_space)

# define the metrics for optimization

metric_a = GenericNoisyFunctionMetric("a", f=f1, noise_sd=0.0, lower_is_better=True)

metric_b = GenericNoisyFunctionMetric("b", f=f2, noise_sd=0.0, lower_is_better=True)

metric_c = GenericNoisyFunctionMetric("c", f=f3, noise_sd=0.0, lower_is_better=True)

mo = MultiObjective(objectives=[Objective(metric=metric_a),

Objective(metric=metric_b),

Objective(metric=metric_c)

]

)

ref_point = [-1.1]*len(mo.metrics)

refpoints = torch.Tensor(ref_point).to(**tkwargs) # [1.1, 1.1, 1.1] for 3 objs

# Thresholds tries to restrict the objectives to be within the given bounds

# https://ax.dev/tutorials/multiobjective_optimization.html#Set-Objective-Thresholds-to-focus-candidate-generation-in-a-region-of-interest

objective_thresholds = [ObjectiveThreshold(metric=metric, bound=val, relative=False, op=ComparisonOp.LEQ)

for metric, val in zip(mo.metrics, refpoints) #---> this requires defining a torch.float64 object --- by default is (-)1.1 for DTLZ

]

optimization_config = MultiObjectiveOptimizationConfig(

objective=mo,

objective_thresholds=objective_thresholds

)

SearchSpace(parameters=[RangeParameter(name='x0', parameter_type=FLOAT, range=[0.5, 1.0]), RangeParameter(name='x1', parameter_type=FLOAT, range=[2.5, 5.0]), RangeParameter(name='x2', parameter_type=FLOAT, range=[0.0, 4.0]), RangeParameter(name='x3', parameter_type=FLOAT, range=[0.0, 4.0]), RangeParameter(name='x4', parameter_type=FLOAT, range=[0.0, 4.0]), RangeParameter(name='x5', parameter_type=FLOAT, range=[2.0, 10.0]), RangeParameter(name='x6', parameter_type=FLOAT, range=[2.0, 10.0]), RangeParameter(name='x7', parameter_type=FLOAT, range=[2.0, 10.0])], parameter_constraints=[])

experiment = build_experiment(search_space,optimization_config)

data = initialize_experiment(experiment,N_INIT)

hv_list = []

for i in range(N_BATCH):

print("\n\n...PROCESSING BATCH n.: {}\n\n".format(i+1))

model = Models.FULLYBAYESIANMOO(

experiment=experiment,

data=data, # tell the data

# use fewer num_samples and warmup_steps to speed up this tutorial

num_samples=32,#256

warmup_steps=64,#512

torch_device=tkwargs["device"],

verbose=False, # Set to True to print stats from MCMC

disable_progbar=False, # Set to False to print a progress bar from MCMC

)

generator_run = model.gen(BATCH_SIZE) #ask BATCH_SIZE points

trial = experiment.new_batch_trial(generator_run=generator_run)

trial.run()

data = Data.from_multiple_data([data, trial.fetch_data()]) #https://ax.dev/api/core.html#ax.Data.from_multiple_data

print("\n\n\n...calculate df via exp_to_df (i.e., global dataframe so far):\n\n")

metric_names = {index: i for index, i in enumerate(mo.metric_names)}

N_METRICS = len(metric_names)

df = exp_to_df(experiment).sort_values(by=["trial_index"])

outcomes = torch.tensor(df[mo.metric_names].values)

#outcomes, _ = data_to_outcomes(data, N_INIT, i+1, BATCH_SIZE, N_METRICS, metric_names)

partitioning = DominatedPartitioning(ref_point=refpoints, Y=outcomes)

try:

hv = partitioning.compute_hypervolume().item()

except:

hv = 0

print("Failed to compute hv")

hv_list.append(hv)

print(f"Iteration: {i+1}, HV: {hv}")

...PROCESSING BATCH n.: 1

Warmup: 0%| | 0/96 [00:00, ?it/s]/usr/local/lib/python3.7/dist-packages/pyro/infer/mcmc/adaptation.py:235: UserWarning:

torch.triangular_solve is deprecated in favor of torch.linalg.solve_triangularand will be removed in a future PyTorch release.

torch.linalg.solve_triangular has its arguments reversed and does not return a copy of one of the inputs.

X = torch.triangular_solve(B, A).solution

should be replaced with

X = torch.linalg.solve_triangular(A, B). (Triggered internally at ../aten/src/ATen/native/BatchLinearAlgebra.cpp:2183.)

Sample: 100%|██████████| 96/96 [00:10, 9.37it/s, step size=7.17e-01, acc. prob=0.711]

Sample: 100%|██████████| 96/96 [00:14, 6.73it/s, step size=4.34e-01, acc. prob=0.845]

Sample: 100%|██████████| 96/96 [00:08, 11.21it/s, step size=2.74e-01, acc. prob=0.957]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 1, HV: 887.8206680339879

...PROCESSING BATCH n.: 2

Sample: 100%|██████████| 96/96 [00:08, 11.73it/s, step size=5.27e-01, acc. prob=0.880]

Sample: 100%|██████████| 96/96 [00:09, 10.40it/s, step size=3.22e-01, acc. prob=0.948]

Sample: 100%|██████████| 96/96 [00:08, 11.10it/s, step size=4.98e-01, acc. prob=0.854]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 2, HV: 892.7562208007992

...PROCESSING BATCH n.: 3

Sample: 100%|██████████| 96/96 [00:08, 11.54it/s, step size=6.19e-01, acc. prob=0.770]

Sample: 100%|██████████| 96/96 [00:09, 10.04it/s, step size=4.94e-01, acc. prob=0.909]

Sample: 100%|██████████| 96/96 [00:11, 8.66it/s, step size=2.17e-01, acc. prob=0.971]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 3, HV: 894.4974471048765

...PROCESSING BATCH n.: 4

Sample: 100%|██████████| 96/96 [00:08, 11.05it/s, step size=4.47e-01, acc. prob=0.885]

Sample: 100%|██████████| 96/96 [00:09, 9.99it/s, step size=6.63e-01, acc. prob=0.782]

Sample: 100%|██████████| 96/96 [00:10, 8.93it/s, step size=4.00e-01, acc. prob=0.885]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 4, HV: 904.2920608526667

...PROCESSING BATCH n.: 5

Sample: 100%|██████████| 96/96 [00:07, 12.07it/s, step size=6.39e-01, acc. prob=0.812]

Sample: 100%|██████████| 96/96 [00:10, 9.44it/s, step size=3.90e-01, acc. prob=0.883]

Sample: 100%|██████████| 96/96 [00:09, 10.65it/s, step size=5.76e-01, acc. prob=0.857]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 5, HV: 906.591397014965

...PROCESSING BATCH n.: 6

Sample: 100%|██████████| 96/96 [00:09, 10.28it/s, step size=4.28e-01, acc. prob=0.865]

Sample: 100%|██████████| 96/96 [00:10, 8.75it/s, step size=2.58e-01, acc. prob=0.963]

Sample: 100%|██████████| 96/96 [00:12, 7.90it/s, step size=2.39e-01, acc. prob=0.747]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 6, HV: 906.6188873086176

...PROCESSING BATCH n.: 7

Sample: 100%|██████████| 96/96 [00:08, 11.01it/s, step size=3.20e-01, acc. prob=0.801]

Sample: 100%|██████████| 96/96 [00:09, 9.77it/s, step size=3.80e-01, acc. prob=0.909]

Sample: 100%|██████████| 96/96 [00:09, 10.13it/s, step size=5.21e-01, acc. prob=0.776]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 7, HV: 921.3127556613248

...PROCESSING BATCH n.: 8

Sample: 100%|██████████| 96/96 [00:10, 8.96it/s, step size=3.01e-01, acc. prob=0.848]

Sample: 100%|██████████| 96/96 [00:09, 9.79it/s, step size=4.88e-01, acc. prob=0.869]

Sample: 100%|██████████| 96/96 [00:09, 10.58it/s, step size=5.98e-01, acc. prob=0.782]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 8, HV: 921.729533708068

...PROCESSING BATCH n.: 9

Sample: 100%|██████████| 96/96 [00:10, 9.43it/s, step size=4.02e-01, acc. prob=0.911]

Sample: 100%|██████████| 96/96 [00:09, 9.61it/s, step size=5.83e-01, acc. prob=0.495]

Sample: 100%|██████████| 96/96 [00:09, 9.73it/s, step size=4.21e-01, acc. prob=0.797]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 9, HV: 922.0147237470662

...PROCESSING BATCH n.: 10

Sample: 100%|██████████| 96/96 [00:11, 8.54it/s, step size=3.30e-01, acc. prob=0.920]

Sample: 100%|██████████| 96/96 [00:10, 8.79it/s, step size=4.07e-01, acc. prob=0.929]

Sample: 100%|██████████| 96/96 [00:10, 9.51it/s, step size=3.20e-01, acc. prob=0.924]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 10, HV: 922.176720102533

...PROCESSING BATCH n.: 11

Sample: 100%|██████████| 96/96 [00:10, 8.85it/s, step size=3.64e-01, acc. prob=0.866]

Sample: 100%|██████████| 96/96 [00:12, 7.41it/s, step size=1.79e-01, acc. prob=0.964]

Sample: 100%|██████████| 96/96 [00:12, 7.51it/s, step size=2.63e-01, acc. prob=0.959]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 11, HV: 922.176720102533

...PROCESSING BATCH n.: 12

Sample: 100%|██████████| 96/96 [00:11, 8.32it/s, step size=5.19e-01, acc. prob=0.771]

Sample: 100%|██████████| 96/96 [00:12, 7.80it/s, step size=4.59e-01, acc. prob=0.869]

Sample: 100%|██████████| 96/96 [00:11, 8.39it/s, step size=3.96e-01, acc. prob=0.895]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 12, HV: 924.3510295488102

...PROCESSING BATCH n.: 13

Sample: 100%|██████████| 96/96 [00:12, 7.54it/s, step size=4.60e-01, acc. prob=0.734]

Sample: 100%|██████████| 96/96 [00:12, 7.39it/s, step size=3.54e-01, acc. prob=0.913]

Sample: 100%|██████████| 96/96 [00:13, 7.35it/s, step size=2.57e-01, acc. prob=0.909]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 13, HV: 924.3510295488102

...PROCESSING BATCH n.: 14

Sample: 100%|██████████| 96/96 [00:11, 8.47it/s, step size=5.37e-01, acc. prob=0.859]

Sample: 100%|██████████| 96/96 [00:13, 6.93it/s, step size=2.95e-01, acc. prob=0.952]

Sample: 100%|██████████| 96/96 [00:11, 8.32it/s, step size=3.72e-01, acc. prob=0.922]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 14, HV: 927.4192352789466

...PROCESSING BATCH n.: 15

Sample: 100%|██████████| 96/96 [00:11, 8.10it/s, step size=4.38e-01, acc. prob=0.899]

Sample: 100%|██████████| 96/96 [00:12, 7.49it/s, step size=6.97e-01, acc. prob=0.578]

Sample: 100%|██████████| 96/96 [00:12, 7.91it/s, step size=5.00e-01, acc. prob=0.894]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 15, HV: 933.084994715977

...PROCESSING BATCH n.: 16

Sample: 100%|██████████| 96/96 [00:12, 7.56it/s, step size=3.76e-01, acc. prob=0.672]

Sample: 100%|██████████| 96/96 [00:15, 6.04it/s, step size=1.74e-01, acc. prob=0.938]

Sample: 100%|██████████| 96/96 [00:14, 6.52it/s, step size=2.86e-01, acc. prob=0.956]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 16, HV: 933.6004513285546

...PROCESSING BATCH n.: 17

Sample: 100%|██████████| 96/96 [00:15, 6.33it/s, step size=5.44e-01, acc. prob=0.849]

Sample: 100%|██████████| 96/96 [00:17, 5.38it/s, step size=2.13e-01, acc. prob=0.960]

Sample: 100%|██████████| 96/96 [00:13, 7.17it/s, step size=8.07e-01, acc. prob=0.768]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 17, HV: 933.6004513285546

...PROCESSING BATCH n.: 18

Sample: 100%|██████████| 96/96 [00:14, 6.79it/s, step size=6.01e-01, acc. prob=0.591]

Sample: 100%|██████████| 96/96 [00:19, 5.03it/s, step size=1.44e-01, acc. prob=0.922]

Sample: 100%|██████████| 96/96 [00:13, 7.04it/s, step size=5.64e-01, acc. prob=0.848]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 18, HV: 933.6708948078322

...PROCESSING BATCH n.: 19

Sample: 100%|██████████| 96/96 [00:14, 6.83it/s, step size=5.95e-01, acc. prob=0.861]

Sample: 100%|██████████| 96/96 [00:15, 6.33it/s, step size=6.04e-01, acc. prob=0.833]

Sample: 100%|██████████| 96/96 [00:21, 4.39it/s, step size=1.09e-01, acc. prob=0.935]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 19, HV: 938.5741413021568

...PROCESSING BATCH n.: 20

Sample: 100%|██████████| 96/96 [00:18, 5.07it/s, step size=2.91e-01, acc. prob=0.911]

Sample: 100%|██████████| 96/96 [00:17, 5.53it/s, step size=3.19e-01, acc. prob=0.588]

Sample: 100%|██████████| 96/96 [00:16, 5.82it/s, step size=4.49e-01, acc. prob=0.238]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 20, HV: 938.5741413021568

...PROCESSING BATCH n.: 21

Sample: 100%|██████████| 96/96 [00:18, 5.13it/s, step size=3.84e-01, acc. prob=0.840]

Sample: 100%|██████████| 96/96 [00:18, 5.22it/s, step size=3.22e-01, acc. prob=0.662]

Sample: 100%|██████████| 96/96 [00:23, 4.04it/s, step size=1.16e-01, acc. prob=0.951]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 21, HV: 939.5469992013661

...PROCESSING BATCH n.: 22

Sample: 100%|██████████| 96/96 [00:23, 4.14it/s, step size=1.37e-01, acc. prob=0.855]

Sample: 100%|██████████| 96/96 [00:17, 5.36it/s, step size=4.45e-01, acc. prob=0.862]

Sample: 100%|██████████| 96/96 [00:20, 4.71it/s, step size=2.65e-01, acc. prob=0.780]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 22, HV: 942.9785128794558

...PROCESSING BATCH n.: 23

Sample: 100%|██████████| 96/96 [00:16, 5.68it/s, step size=5.38e-01, acc. prob=0.748]

Sample: 100%|██████████| 96/96 [00:19, 4.84it/s, step size=2.62e-01, acc. prob=0.955]

Sample: 100%|██████████| 96/96 [00:19, 5.02it/s, step size=3.09e-01, acc. prob=0.890]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 23, HV: 942.9785128794559

...PROCESSING BATCH n.: 24

Sample: 100%|██████████| 96/96 [00:16, 5.73it/s, step size=6.32e-01, acc. prob=0.722]

Sample: 100%|██████████| 96/96 [00:21, 4.49it/s, step size=2.11e-01, acc. prob=0.850]

Sample: 100%|██████████| 96/96 [00:18, 5.07it/s, step size=5.07e-01, acc. prob=0.688]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 24, HV: 943.7649958874345

...PROCESSING BATCH n.: 25

Sample: 100%|██████████| 96/96 [00:24, 3.93it/s, step size=1.46e-01, acc. prob=0.985]

Sample: 100%|██████████| 96/96 [00:19, 4.97it/s, step size=4.26e-01, acc. prob=0.876]

Sample: 100%|██████████| 96/96 [00:21, 4.53it/s, step size=2.68e-01, acc. prob=0.726]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 25, HV: 946.4383626494581

...PROCESSING BATCH n.: 26

Sample: 100%|██████████| 96/96 [00:18, 5.09it/s, step size=6.03e-01, acc. prob=0.923]

Sample: 100%|██████████| 96/96 [00:20, 4.73it/s, step size=5.02e-01, acc. prob=0.899]

Sample: 100%|██████████| 96/96 [00:19, 4.86it/s, step size=6.46e-01, acc. prob=0.433]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 26, HV: 946.7074603531958

...PROCESSING BATCH n.: 27

Sample: 100%|██████████| 96/96 [00:20, 4.77it/s, step size=3.76e-01, acc. prob=0.924]

Sample: 100%|██████████| 96/96 [00:22, 4.33it/s, step size=2.68e-01, acc. prob=0.963]

Sample: 100%|██████████| 96/96 [00:30, 3.20it/s, step size=6.42e-02, acc. prob=0.982]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 27, HV: 946.7074603531956

...PROCESSING BATCH n.: 28

Sample: 100%|██████████| 96/96 [00:21, 4.45it/s, step size=6.31e-01, acc. prob=0.780]

Sample: 100%|██████████| 96/96 [00:29, 3.20it/s, step size=2.26e-01, acc. prob=0.952]

Sample: 100%|██████████| 96/96 [00:25, 3.78it/s, step size=3.75e-01, acc. prob=0.939]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 28, HV: 947.0862971253677

...PROCESSING BATCH n.: 29

Sample: 100%|██████████| 96/96 [00:25, 3.79it/s, step size=2.51e-01, acc. prob=0.927]

Sample: 100%|██████████| 96/96 [00:32, 2.91it/s, step size=2.45e-01, acc. prob=0.867]

Sample: 100%|██████████| 96/96 [00:44, 2.14it/s, step size=5.16e-01, acc. prob=0.919]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 29, HV: 947.0862971253675

...PROCESSING BATCH n.: 30

Sample: 100%|██████████| 96/96 [00:23, 4.09it/s, step size=2.72e-01, acc. prob=0.946]

Sample: 100%|██████████| 96/96 [00:22, 4.28it/s, step size=4.44e-01, acc. prob=0.917]

Sample: 100%|██████████| 96/96 [00:28, 3.40it/s, step size=2.27e-01, acc. prob=0.866]

...calculate df via exp_to_df (i.e., global dataframe so far):

Iteration: 30, HV: 947.0862971253675

Analysis of Results#

Inspecting the Hyper volume statistics#

import plotly.express as px

fig = px.scatter(x = np.arange(N_BATCH) + 1, y = hv_list,

labels={"x": "N_BATCHES",

"y": "Hyper Volume"},

width = 800, height = 800,

title = "HyperVolume Improvement", )

fig.update_traces(marker=dict(size=8,

line=dict(width=2,

color='DarkSlateGrey')),

selector=dict(mode='marker+line'))

fig.data[0].update(mode = "markers+lines")

fig.show()

Overall Performance in the Objective space.#

fig1 = px.scatter_3d(df, x="a", y="b", z = "c", color = "trial_index",

labels = { "a": "InEfficiency",

"b": "Volume",

"c": "Resolution"

}, hover_data = df.columns,

height = 800, width = 800)

fig1.show()

Exploration as a function of Iteration number#

import plotly.graph_objects as go

frames = []

for trial in df.trial_index.unique():

tmp_df = df[df.trial_index <= trial]

frames.append(go.Frame(data = [

go.Scatter3d(x = tmp_df.a, y = tmp_df.b, z = tmp_df.c,

marker = dict(cmin = df.trial_index.min(),

cmax = df.trial_index.max(),

color = tmp_df.trial_index),

mode = "markers")

],

layout = go.Layout(title_text = "Trial index : {}".format(trial))))

fig = go.Figure(

data=[go.Scatter3d(x = df.a, y = df.b, z = df.c,

marker = dict(cmin = df.trial_index.min(),

cmax = df.trial_index.max(),

color = df.trial_index),

mode = "markers")

],

layout=go.Layout(

xaxis=dict(range=[0., 0.6], autorange=False),

yaxis=dict(range=[0., 400.], autorange=False),

updatemenus=[dict(

type="buttons",

buttons=[dict(label="Play",

method="animate",

args=[None]),

{

"args": [[None], {"frame": {"duration": 0, "redraw": False},

"mode": "immediate",

"transition": {"duration": 0}}],

"label": "Pause",

"method": "animate"

}])],

width = 800, height = 700

),

frames=frames

)

fig.update_layout(

scene = dict(xaxis = dict(range=[0.,0.6]),

yaxis = dict(range=[0., 400.],),

zaxis = dict(range=[0., 0.6]),

xaxis_title = "InEfficiency",

yaxis_title = "Volume",

zaxis_title = "Resolution")

)

fig.update()

fig.show()

Validating the Surrogate model’s performance.#

Since the model is trained on objectives, One can perform k-fold validation to see the performance of the surrgoate model’s prediction

from ax.modelbridge.cross_validation import cross_validate

from ax.plot.diagnostic import tile_cross_validation

#https://ax.dev/api/_modules/ax/modelbridge/cross_validation.html

cv = cross_validate(model, folds = 5)

render(tile_cross_validation(cv))

Computing posterior pareto frontiers.#

One can sample expected approximate pareto front solution from the built surrogate model.

from ax.core import metric

# https://ax.dev/api/plot.html#ax.plot.pareto_utils.compute_posterior_pareto_frontier

# absolute_metrics – List of outcome metrics that should NOT be relativized w.r.t. the status quo

# (all other outcomes will be in % relative to status_quo).

# Note that approximated pareto frontier is can be visualized only against 2 objectives.

# So one can try to make mixed plots, to see the ``

n_points_surrogate = 25

frontier = [] #(a,b), (a,c), (b,c)

metric_combos = [(metric_a, metric_b), (metric_a, metric_c), (metric_b, metric_c)]

for combo in metric_combos:

print ("computing pareto frontier : ", combo)

frontier.append(compute_posterior_pareto_frontier(

experiment=experiment,

data=experiment.fetch_data(),

primary_objective=combo[0], #_b

secondary_objective=combo[1], #_a

absolute_metrics=["a", "b", "c"],

num_points=n_points_surrogate,

))

#render(plot_pareto_frontier(frontier, CI_level=0.9))

#res_front = plot_pareto_frontier(frontier, CI_level=0.8)

computing pareto frontier : (GenericNoisyFunctionMetric('a'), GenericNoisyFunctionMetric('b'))

computing pareto frontier : (GenericNoisyFunctionMetric('a'), GenericNoisyFunctionMetric('c'))

computing pareto frontier : (GenericNoisyFunctionMetric('b'), GenericNoisyFunctionMetric('c'))

print ("Metric_a, Metric_b")

render(plot_pareto_frontier(frontier[0], CI_level=0.8))

Metric_a, Metric_b

print ("Metric_a, Metric_c")

render(plot_pareto_frontier(frontier[1], CI_level=0.8))

Metric_a, Metric_c

print ("Metric_b, Metric_c")

render(plot_pareto_frontier(frontier[2], CI_level=0.8))

Metric_b, Metric_c

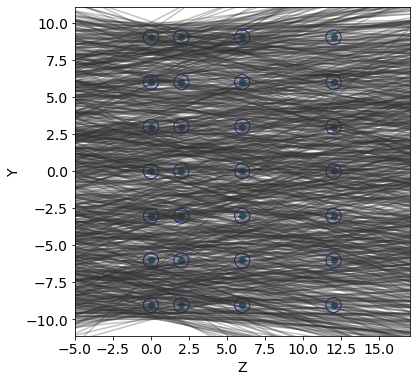

Results from the computed Pareto front.#

# Extract performance of the pareto front, 3 in here frontier[s] s = [a, b, c]

def plot_from_pareto(frontier, index):

'''

Input: ParetoFrontierResults, enquiry index to plot

'''

params = [frontier.param_dicts[index]['x{}'.format(i)] for i in range(8)]

metric_names = frontier.absolute_metrics

pred_means = [frontier.means[metric][index] for metric in metric_names]

R = params[0]; pitch = params[1]

Y = params[2:5]; Z = params[5:]

param_names = ["R", "pitch", "y1", "y2", "y3", "z1", "z2", "z3"]

print("##### Parameters #####\n")

for p in range(len(params)):

print ("{} : {}\n".format(param_names[p], params[p]))

print("######################\n")

tr = detector2.Tracker(R, pitch, y1, y2, y3, z1, z2, z3)

Z, Y = tr.create_geometry()

res.update_design_point(np.array(params))

detector2.geometry_display(Z, Y, R, y_min=y_min, y_max=y_max,block=False, pause=-1)

detector2.tracks_display(tracks, Z,block=False,pause=-1)

score = detector2.get_score(Z, Y, tracks, R)

frac_detected = score[0]

resolution = score[1]

fvolume = detector2.wires_volume(Y, y_min, y_max, R)

print ("\n#####Summary of Comparison#####\n")

print ("Caculated InEff : ", 1. - frac_detected, "posterior InEff : ", pred_means[0], "\n")

print ("Caculated Volume : ", fvolume, "posterior Volume : ", pred_means[1], "\n")

print ("Caculated resolution : ", resolution, "posterior resolution : ", pred_means[2], "\n")

plot_from_pareto(frontier[1], 24)

##### Parameters #####

R : 0.5

pitch : 3.009258888262197

y1 : 3.3494196053302043

y2 : 2.0216309930584533

y3 : 0.257109372083595

z1 : 7.376016115478601

z2 : 5.022381000387314

z3 : 2.0009209309114238

######################

#####Summary of Comparison#####

Caculated InEff : 0.616 posterior InEff : 0.5645657816611145

Caculated Volume : 87.92 posterior Volume : 89.53302552311727

Caculated resolution : 0.25079614840949394 posterior resolution : 0.23012146381186147

Exercise 3#

Determine the Pareto set from the 3D front and choose an optimal point

Plot the optimal configuration of the tracker corresponding to that point

Do analysis of convergence

Visualize the point with a radar or petal diagram, following https://pymoo.org/visualization/index.html