Developed Pipeline


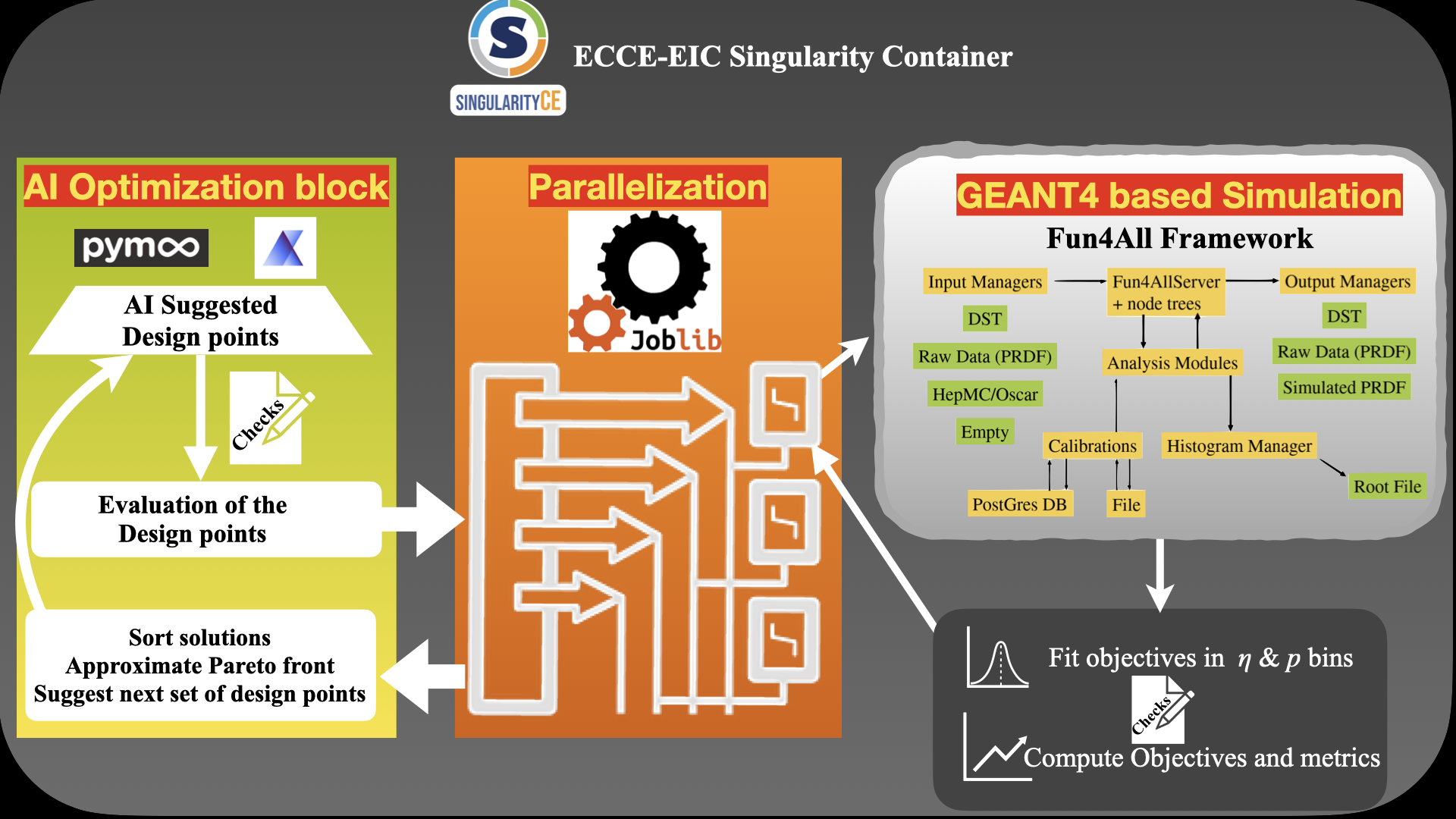

Fig. 2 shows a typical workflow for optimizing a detector design; all of its pieces are now in place.

The previous sections explained the individual pieces of the pipeline in detail. This section summarizes the complete pipeline.

Fun4All - detector response simulator#

The Fun4All framework (based on GEANT4) was used to simulate the detector response of a given design solution.

The existing Fun4All framework had to be modified to accommodate the dynamically changing detector dimensions (that is, to incorporate the parameterization). A separate version of the Fun4All framework was therefore maintained for this project, and it was kept in sync with the most up-to-date version of Fun4All.

The optimization plugin#

Currently, the framework supports two types of optimization libraries in Python.

MOO using EA#

Multi-objective optimization using the pymoo [3] framework (specifically the NSGA-II workflow).
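NSGA-II ranks candidate designs by Pareto dominance. The following is a minimal, library-free sketch of that idea, not the pymoo implementation. It assumes that all objectives are minimized; the function names and the sample objective values are hypothetical.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b everywhere and strictly better somewhere."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(points: List[Sequence[float]]) -> List[int]:
    """Return the indices of the points that no other point dominates."""
    return [
        i
        for i, p in enumerate(points)
        if not any(dominates(q, p) for j, q in enumerate(points) if j != i)
    ]


# Hypothetical objective values for four designs (illustration only).
designs = [(1.0, 5.0, 3.0), (2.0, 4.0, 3.0), (2.0, 6.0, 4.0), (0.5, 7.0, 2.0)]
front = non_dominated(designs)
print(front)
```

The third design is worse than the first in every objective, so it is the only one excluded from the front.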

The parameters used in this pipeline are summarized below.

| Description | Symbol | Value | Remarks |
|---|---|---|---|
| Population size | N | 100 | Introduce reference gene |
| # Objectives | M | 3 (2) | More details in [2] |
| # Offspring | O | 30 | - |
| Design size | D | 9 (11) | More details in [2] |
| # Calls (tot. budget) | - | 200 | - |
| # Cores (1st level) | - | Same as offspring | Pipeline deploys a |
| # Cores for detector simulation | - | 4 | Second level of parallelisation |
| # \(\pi^{-}\) tracks | \(N_{track}\) | 120k | - |
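The two levels of parallelisation in the table imply a simple resource estimate. The sketch below only does that arithmetic. It assumes that all offspring are simulated concurrently and that the \(\pi^{-}\) tracks of one design are split evenly across its simulation cores; neither assumption is stated explicitly above, and the class and attribute names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    n_offspring: int = 30       # designs evaluated per iteration (table: O)
    cores_per_design: int = 4   # second level of parallelisation
    n_tracks: int = 120_000     # pi- tracks per design (table: N_track)

    @property
    def first_level_cores(self) -> int:
        # Table: the first-level core count equals the number of offspring.
        return self.n_offspring

    @property
    def total_simulation_cores(self) -> int:
        # Assumption: all offspring are simulated at the same time.
        return self.n_offspring * self.cores_per_design

    @property
    def tracks_per_core(self) -> int:
        # Assumption: tracks are divided evenly among the simulation cores.
        return self.n_tracks // self.cores_per_design


res = Resources()
print(res.first_level_cores, res.total_simulation_cores, res.tracks_per_core)
```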

MOO using BO#

Multi-objective Bayesian optimization using the Ax framework (qNEHVI + SAASBO).

The parameters used in this pipeline are summarized below.

| Description | Symbol | Value | Remarks |
|---|---|---|---|
| Population size | N | 100 | Introduce reference gene |
| # Objectives | M | 3 (2) | More details in [2] |
| # Offspring | O | 30 | - |
| Design size | D | 9 (11) | More details in [2] |
| # Calls (tot. budget) | - | 200 | - |
| # Cores (1st level) | - | Same as offspring | Pipeline deploys a |
| # Cores for detector simulation | - | 4 | Second level of parallelisation |
| # \(\pi^{-}\) tracks | \(N_{track}\) | 120k | - |
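qNEHVI selects candidates by their expected improvement in hypervolume. As a rough illustration of the quantity involved (not of the Ax implementation), the sketch below computes the exact hypervolume of a two-objective front. It assumes that both objectives are minimized; the function name, the reference point, and the sample values are hypothetical.

```python
from typing import List, Tuple


def hypervolume_2d(points: List[Tuple[float, float]], ref: Tuple[float, float]) -> float:
    """Area dominated by `points` and bounded by `ref` (both objectives minimized)."""
    # Keep only points strictly better than the reference in both objectives.
    inside = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area = 0.0
    best_f2 = ref[1]  # lowest second objective seen so far
    for f1, f2 in inside:  # ascending in the first objective
        if f2 < best_f2:  # non-dominated so far, so it adds a new rectangle
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area


# Hypothetical two-objective front and reference point (illustration only).
front = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
hv = hypervolume_2d(front, ref=(4.0, 4.0))
print(hv)
```

A candidate that increases this area extends the front; a dominated candidate leaves it unchanged.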

Checks on design point#

Each time the optimization algorithm suggests a design point, a series of checks is run before and after its simulation. These checks ensure the feasibility of the design solution and also serve as a QA test of the simulated sample. The details of the checks are outlined in the figure below.
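The before-and-after structure of these checks can be sketched as follows. This is only an illustration: the parameter bounds, the minimum-track threshold, and the `fake_simulate` stand-in are hypothetical and do not reproduce the project's actual checks.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]
Sample = Dict[str, int]


def pre_check(design: Sequence[float], bounds: Bounds) -> bool:
    """Feasibility check before simulation: every parameter within its bounds."""
    return len(design) == len(bounds) and all(
        lo <= x <= hi for x, (lo, hi) in zip(design, bounds)
    )


def post_check(sample: Sample, min_tracks: int) -> bool:
    """QA check after simulation: the sample holds enough tracks."""
    return sample.get("n_tracks", 0) >= min_tracks


def evaluate(
    design: Sequence[float],
    bounds: Bounds,
    simulate: Callable[[Sequence[float]], Sample],
    min_tracks: int,
) -> Optional[Sample]:
    """Run the checks around one simulation; return None if any check fails."""
    if not pre_check(design, bounds):
        return None  # infeasible design: skip the expensive simulation
    sample = simulate(design)
    return sample if post_check(sample, min_tracks) else None


def fake_simulate(design: Sequence[float]) -> Sample:
    """Hypothetical stand-in for the detector simulation."""
    return {"n_tracks": 120_000}


bounds = [(0.0, 1.0)] * 3
accepted = evaluate([0.2, 0.5, 0.9], bounds, fake_simulate, min_tracks=100_000)
rejected = evaluate([0.2, 1.5, 0.9], bounds, fake_simulate, min_tracks=100_000)
```

Running the feasibility check first means an infeasible point never reaches the simulation.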

Putting it all together#

With all the pieces in place, the overall pipeline is summarized below.

Fig. 8 The modular pipeline for optimizing the EIC tracker.#